FunASR:多模型协同推理与语音处理全链路实践 (ASR, VAD, PUNC, SV)

本文详细介绍了 FunASR 这一基础语音识别工具包,它提供了一套完整的语音处理服务,涵盖了离线转写和实时听写两大核心功能。其技术核心在于 AutoModel 多模型协调引擎,能够将不同的组件,如语音活动检测(VAD)、自动语音识别(ASR)、标点恢复和说话人分离(SV),按序串联起来,实现复杂的音频转录任务。文档清晰展示了从原始音频输入到最终带说话人标签的转录结果的完整处理流程和数据流向。此外,本文不仅罗列了支持的多种中英文模型清单,还附带了音频格式转换指南和代码示例。最后,通过实验性能对比,文章论证了在不同硬件上,结合 VAD、PUNC 和 SV 等组件后对推理用时和处理准确性的影响。

ASR 模型综合对比表

| 模型名称 | 中文准确度 | 英文/混合识别 | 可读性 (标点) | 附加功能 | 综合评分 |

|---|---|---|---|---|---|

| Fun-ASR-Nano | 极高 | 完美 | 极佳 | 生产环境级别 | 5.0 |

| SenseVoiceSmall | 高 | 较弱 (漏失) | 较好 | 情感/事件检测 | 4.0 |

paraformer-zh (ASR) |

一般 | 极差 | 无 | 原始数据 | 2.0 |

paraformer-zh (+VAD +PUNC) |

高 | 中等 | 优秀 | 自动断句 | 4.5 |

建议:

- 如果你的场景需要极致的准确率和排版,首选 Fun-ASR-Nano。

- 如果你的场景需要分析说话人的情绪,SenseVoiceSmall 是唯一的选择。

- 对于普通的长音频转写,带标点补全的 paraformer-zh 性价比最高。

FunASR

FunASR 是一个基础语音识别工具包,提供多种功能,包括语音识别(ASR)、语音端点检测(VAD)、标点恢复、语言模型、说话人验证、说话人分离和多人对话语音识别等。

离线文件转写服务

FunASR离线文件转写软件包,提供了一款功能强大的语音离线文件转写服务。拥有完整的语音识别链路,结合了语音端点检测、语音识别、标点等模型,可以将几十个小时的长音频与视频识别成带标点的文字,而且支持上百路请求同时进行转写。输出为带标点的文字,含有字级别时间戳,支持ITN与用户自定义热词等。服务端集成有ffmpeg,支持各种音视频格式输入。软件包提供有html、python、c++、java与c#等多种编程语言客户端。

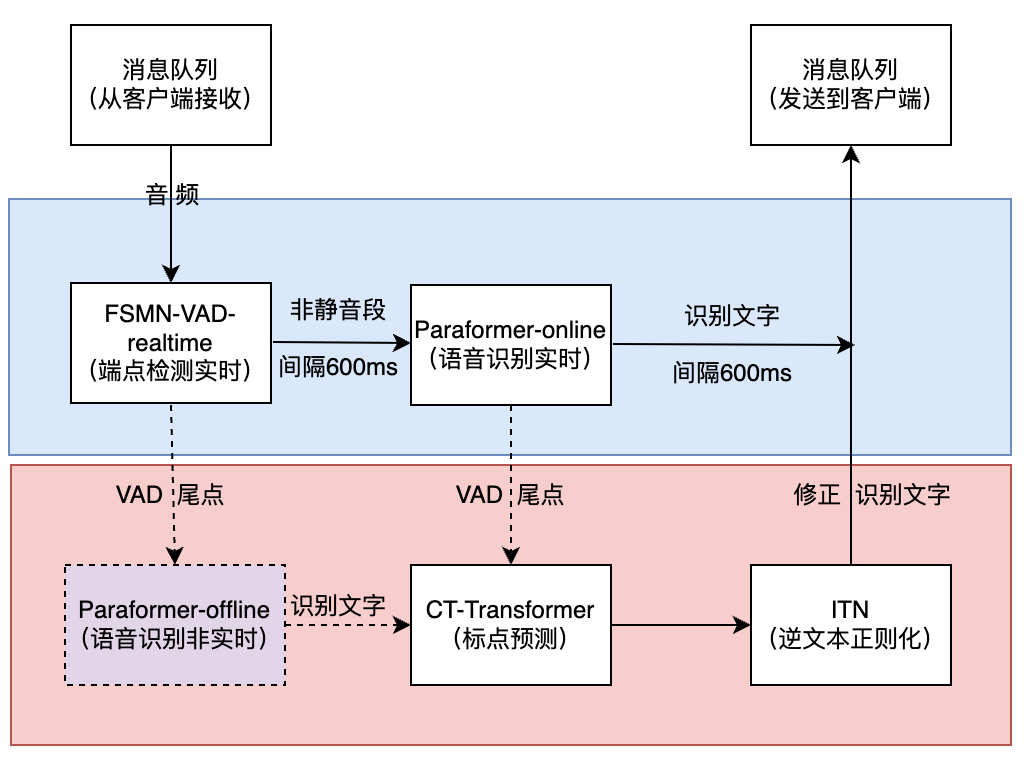

实时听写服务

FunASR实时语音听写软件包,集成了实时版本的语音端点检测模型、语音识别、语音识别、标点预测模型等。采用多模型协同,既可以实时的进行语音转文字,也可以在说话句尾用高精度转写文字修正输出,输出文字带有标点,支持多路请求。依据使用者场景不同,支持实时语音听写服务(online)、非实时一句话转写(offline)与实时与非实时一体化协同(2pass)3种服务模式。软件包提供有html、python、c++、java与c#等多种编程语言客户端。

AutoModel 代码整体架构

FunASR 的 AutoModel 类,它是一个多模型协调引擎,用于语音处理任务。主要支持以下几种模型的组合:

核心模型类型

| 模型类型 | 功能 | 用途 |

|---|---|---|

主模型 (model) |

自动语音识别(ASR) | 核心语音转文字功能 |

| VAD 模型 | 语音活动检测 | 检测音频中的语音段 |

标点模型 (punc_model) |

标点符号恢复 | 为识别文本添加标点 |

说话人模型 (spk_model) |

说话人识别/分离 | 识别不同说话人、进行说话人分离 |

多模型协作流程

初始化阶段 (__init__ 方法)

1. 构建主ASR模型

↓

2. 如果指定VAD模型 → 加载VAD模型

↓

3. 如果指定标点模型 → 加载标点模型

↓

4. 如果指定说话人模型 → 加载说话人模型 + 聚类后端

推理流程 (generate → inference_with_vad)

输入音频

↓

┌──────────────────────────────────────────┐

│ 步骤1: VAD 分段 (可选)

│ 使用VAD模型检测音频中的语音段

│ 输出: [{start_ms, end_ms}, ...]

└──────────────────────────────────────────┘

↓

┌──────────────────────────────────────────┐

│ 步骤2: ASR 识别 (主要处理)

│ 对每个VAD段进行语音识别

│ 输出: 识别文本 + 时间戳

└──────────────────────────────────────────┘

↓

┌──────────────────────────────────────────┐

│ 步骤3: 标点恢复 (可选)

│ 为识别文本添加标点符号

│ 输入: 原始文本

│ 输出: 有标点的文本 + 标点数组

└──────────────────────────────────────────┘

↓

┌──────────────────────────────────────────┐

│ 步骤4: 说话人分离/识别 (可选)

│ • 提取每个片段的说话人嵌入向量

│ • 使用聚类将相同说话人聚集

│ • 分配说话人标签

│ 输出: 带说话人信息的句子列表

└──────────────────────────────────────────┘

↓

最终输出: 完整的转录结果

关键方法解析

1️⃣ prepare_data_iterator - 数据准备

处理多种输入格式:

- 文件路径 (

.scp,.jsonl,.txt) - 音频数据 (原始样本、字节、fbank特征)

- 列表 (多个输入文件)

2️⃣ build_model - 模型构建

- 下载模型权重

- 构建 tokenizer (分词器)

- 构建 frontend (前端特征提取)

- 加载预训练权重

- 处理 FP16/BF16 精度转换

3️⃣ inference - 单个模型推理

单个批次处理流程:

数据加载 → 前向传播 → 结果收集

支持实时进度回调

计算RTF (实时因子) 等性能指标

4️⃣ inference_with_vad - 多模型协作推理

关键步骤:

# 步骤1: VAD分段

res = self.inference(input, model=self.vad_model)

# res = [{"key": "...", "value": [[start, end], ...]}]

# 步骤2: 按时长排序VAD片段并分批处理

sorted_data = sorted(data_with_index, key=lambda x: x[0][1] - x[0][0])

# 步骤3: 对每个VAD片段进行ASR

results = self.inference(speech_j, model=self.model)

# 步骤4: 如果有说话人模型,提取说话人嵌入

if self.spk_model is not None:

segments = sv_chunk(vad_segments) # 分割成更小的片段

spk_res = self.inference(speech_b, model=self.spk_model)

# 获取说话人嵌入向量

# 步骤5: 对所有VAD片段的结果进行合并

for j in range(n):

if k.startswith("timestamp"):

# 调整时间戳

result[k].extend(restored_data[j][k])

# 步骤6: 标点恢复

punc_res = self.inference(result["text"], model=self.punc_model)

# 步骤7: 说话人聚类和分配

labels = self.cb_model(spk_embedding.cpu())

sv_output = postprocess(all_segments, None, labels, spk_embedding.cpu())

distribute_spk(sentence_list, sv_output)

模型间的数据流

┌─────────────────────────────────────────────────────────┐

│ 输入音频信号

└─────────────────────────────────────────────────────────┘

↓

┌─────────────────────────────────────────────────────────┐

│ VAD模型: 音频 → 语音活动检测

│ 输出: [[0ms, 2000ms], [3000ms, 5500ms], ...]

└─────────────────────────────────────────────────────────┘

↓

┌─────────────────────────────────────────┐

│ 按时长排序优化处理顺序

└─────────────────────────────────────────┘

↓ (对每个片段)

┌─────────────────────────────────────────────────────────┐

│ ASR模型: 音频片段 → 文本 + 时间戳

│ 输出: {"text": "你好", "timestamp": [[0,0.5], ...]}

└─────────────────────────────────────────────────────────┘

↓

┌──────────────────────────────────────┐

│ 结果合并和恢复原始顺序

└──────────────────────────────────────┘

↓

┌─────────────────────────────────────────────────────────┐

│ 标点模型: 文本 → 带标点的文本

│ 输出: {"text": "你好。", "punc_array": [...]}

└─────────────────────────────────────────────────────────┘

↓

┌─────────────────────────────────────────────────────────┐

│ 说话人模型: 每个片段 → 说话人嵌入向量

│ 输出: spk_embedding (shape: [N, embedding_dim])

└─────────────────────────────────────────────────────────┘

↓

┌─────────────────────────────────────────────────────────┐

│ 聚类后端: 嵌入向量 → 说话人标签

│ 输出: labels = [speaker_id_1, speaker_id_2, ...]

└─────────────────────────────────────────────────────────┘

↓

┌─────────────────────────────────────────────────────────┐

│ 后处理: 关联说话人信息到句子

│ 最终输出: 带说话人标签的完整转录

└─────────────────────────────────────────────────────────┘

模型

(注:⭐ 表示ModelScope模型仓库,🤗 表示Huggingface模型仓库,🍀表示OpenAI模型仓库)

| 模型名字 | 任务详情 | 训练数据 | 参数量 |

|---|---|---|---|

| Fun-ASR-Nano (⭐ 🤗 ) |

语音识别,支持中文、英文与日语,其中中文支持7个方言,26个地方口音,英文与日语覆盖多地区口音,歌词识别,说唱等 | 数千万小时 | 800M |

| SenseVoiceSmall (⭐ 🤗 ) |

多种语音理解能力,涵盖了自动语音识别(ASR)、语言识别(LID)、情感识别(SER)以及音频事件检测(AED) | 400000小时,中文 | 330M |

| paraformer-zh (⭐ 🤗 ) |

语音识别,带时间戳输出,非实时 | 60000小时,中文 | 220M |

| paraformer-zh-streaming ( ⭐ 🤗 ) |

语音识别,实时 | 60000小时,中文 | 220M |

| paraformer-en ( ⭐ 🤗 ) |

语音识别,非实时 | 50000小时,英文 | 220M |

| conformer-en ( ⭐ 🤗 ) |

语音识别,非实时 | 50000小时,英文 | 220M |

| ct-punc ( ⭐ 🤗 ) |

标点恢复 | 100M,中文与英文 | 290M |

| fsmn-vad ( ⭐ 🤗 ) |

语音端点检测,实时 | 5000小时,中文与英文 | 0.4M |

| fsmn-kws ( ⭐ ) |

语音唤醒,实时 | 5000小时,中文 | 0.7M |

| fa-zh ( ⭐ 🤗 ) |

字级别时间戳预测 | 50000小时,中文 | 38M |

| cam++ ( ⭐ 🤗 ) |

说话人确认/分割 | 5000小时 | 7.2M |

| Whisper-large-v3 (⭐ 🍀 ) |

语音识别,带时间戳输出,非实时 | 多语言 | 1550 M |

| Whisper-large-v3-turbo (⭐ 🍀 ) |

语音识别,带时间戳输出,非实时 | 多语言 | 809 M |

| Qwen-Audio (⭐ 🤗 ) |

音频文本多模态大模型(预训练) | 多语言 | 8B |

| Qwen-Audio-Chat (⭐ 🤗 ) |

音频文本多模态大模型(chat版本) | 多语言 | 8B |

| emotion2vec+large (⭐ 🤗 ) |

情感识别模型 | 40000小时,4种情感类别 | 300M |

基础知识

PCM(脉冲编码调制)

PCM(Pulse Code Modulation,脉冲编码调制)是一种将模拟声音转换为数字信号的最基础、最标准的方法,它通过固定的采样率(如 16kHz)对连续声波进行均匀取样,并用固定的量化精度(如 16bit)记录每个采样点的振幅,从而形成一串线性且无压缩的原始波形数据。PCM 不包含任何编码、压缩或格式元数据,因此既能完整保留语音特征,又便于实时处理,是绝大多数语音识别(ASR)模型和实时音频系统的默认输入格式。

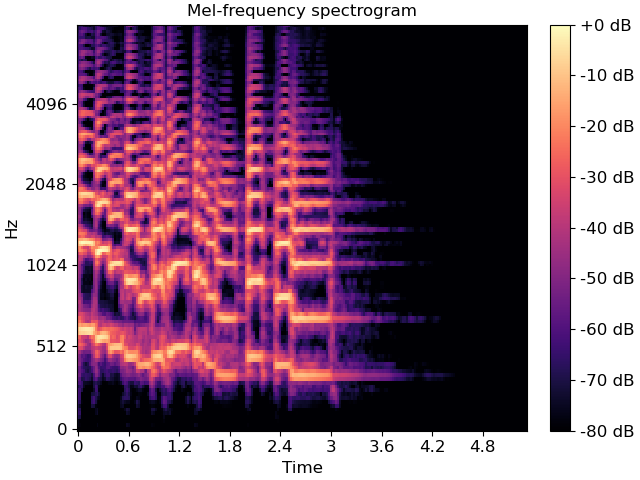

梅尔频谱图 (Mel-spectrogram)

Mel-频谱图,你可以把它想象成是声音的“照片”,但这张照片是人工智能最容易理解的版本。

简单来说:

- 频谱图 (Spectrogram):它将一段声音(比如人说话或一段音乐)分解成一张图。这张图的横轴代表时间,纵轴代表频率(也就是音高),图上的颜色或亮度代表能量(也就是音量)。

- Mel-刻度 (Mel Scale):因为人类耳朵对低音的感知比对高音的感知更精细,所以科学家设计了一个特殊的“Mel”刻度,它模仿了人耳听觉的非线性特性。

- Mel-频谱图:就是将普通的频谱图的纵轴(频率)扭曲了一下,让它更符合人耳的听觉习惯。通过这种处理,AI 模型(比如语音识别或音乐分类系统)在分析这张“照片”时,就能更关注那些人类真正在意的声音特征,从而大大提高它们理解和处理音频数据的效率和准确性。

总结:它是一种特殊的、模拟人类听觉的声学图像,是现代人工智能处理声音时的标准输入格式。

准备语音文件

| 音频文件 | 说明 |

|---|---|

| meeting.wav | 多人音频、中英文等合并的 |

| test.wav | 我录制的 荷兰... |

| asr_example.wav | 达摩院 |

| guess_age_gender.wav | English |

| output.wav | 百度飞桨 |

| blank2.wav | 2 秒空白 |

| blank4.wav | 4 秒空白 |

| kws_xiaoyunxiaoyun.wav | 小云小云 唤醒 |

| sv_example_enroll.wav | 说话人确认 |

| sv_example_different.wav | 不同说话人 |

| sv_example_same.wav | 相同说话人 |

转换音频格式(m4a -> wav)

使用 ffmpeg 将音频文件转换为 16kHz 单声道 WAV 格式:

ffmpeg -i blank2.m4a -ar 16000 -ac 1 blank2.wav

ar16000:采样率 16 kHz(ASR 常用)ac1:单声道

不加这两个参数则保持原始参数

合并音频文件

创建一个文本文件 list_meeting.txt,内容如下:

list_meeting.txt

file 'test.wav'

file 'blank4.wav'

file 'asr_example.wav'

file 'blank2.wav'

file 'guess_age_gender.wav'

file 'blank2.wav'

file 'output.wav'

file 'blank4.wav'

file 'test.wav'

然后使用以下命令合并音频文件:

ffmpeg -f concat -safe 0 -i list_meeting.txt -ar 16000 -ac 1 -sample_fmt s16 meeting.wav

模拟会议场景,包含多人语音和静音片段。

实验(性能)

- ASR:

Automatic Speech Recognition- 自动语音识别 - VAD:

Voice Activity Detection- 语音活动检测 - PUNC:

Punctuation Restoration- 标点恢复 - SV:

Speaker Verification- 说话人验证/分离

代码

- CPU

from funasr import AutoModel

model = AutoModel(

model="iic/speech_paraformer-large-vad-punc_asr_nat-zh-cn-16k-common-vocab8404-pytorch",

vad_model="iic/speech_fsmn_vad_zh-cn-16k-common-pytorch",

punc_model="iic/punc_ct-transformer_cn-en-common-vocab471067-large",

spk_model="iic/speech_campplus_sv_zh-cn_16k-common",

disable_pbar=True, # 关闭进度条

)

res = model.generate(

input="wav/meeting.wav",

)

print(res)

- MPS

from funasr import AutoModel

model = AutoModel(

model="iic/speech_paraformer-large-vad-punc_asr_nat-zh-cn-16k-common-vocab8404-pytorch",

vad_model="iic/speech_fsmn_vad_zh-cn-16k-common-pytorch",

punc_model="iic/punc_ct-transformer_cn-en-common-vocab471067-large",

spk_model="iic/speech_campplus_sv_zh-cn_16k-common",

disable_pbar=True, # 关闭进度条

device="mps",

)

res = model.generate(

input="wav/meeting.wav",

)

print(res)

性能

- 硬件:Macbook Pro M2 Max, 64GB RAM

- 音频文件长度:84.789 s

全链路性能对比

| 模型配置 | MPS 推理用时 | CPU 推理用时 | MPS vs CPU |

|---|---|---|---|

| ① 纯 ASR(paraformer-zh) | 2.636 s | 2.417 s | 0.92× 🐢 |

| ② ASR + VAD(fsmn-vad) | 1.414 s 🚀 | 2.642 s | 1.87× |

| ③ ASR + VAD + PUNC(ct-punc) | 2.072 s | 2.795 s | 1.35× |

| ④ ASR + VAD + PUNC + SV(cam++) | 4.061 s | 11.839 s | 2.92× |

ASR 模型性能对比

| 模型配置 | MPS 推理用时 | CPU 推理用时 | MPS vs CPU |

|---|---|---|---|

paraformer-zh (ASR) |

2.636 s | 2.417 s | 0.92× |

paraformer-zh (+VAD +PUNC) |

2.072 s | 2.795 s | 1.35× |

| SenseVoiceSmall | 0.420 s | 2.244 s | 5.34× |

| Fun-ASR-Nano | 4.733 s | 11.831 s | 2.50x |

① ASR

[

{

'key': 'meeting',

'text': '格 兰 发 布 了 一 份 主 题 为 宣 布 即 将 对 先 进 半 导 体 制 造 设 备 采 取 的 出 口 管 制 措 施 的 公 告 表 示 鉴 于 技 术 的 发 展 和 地 缘 政 治 的 背 景 政 府 已 经 得 出 结 论 有 必 要 扩 大 现 有 的 特 定 半 导 体 制 造 设 备 的 出 口 管 制 欢 迎 大 家 来 体 验 达 摩 院 推 出 的 语 音 识 别 模 型 i heard that you can 的 a 的 for II know my agent gender from i 欢 百 度 度 讲 深 度 的 的 表 告 发 布 了 一 份 主 题 为 宣 布 即 将 对 先 进 半 导 体 制 造 设 备 管 导 的 出 口 管 制 措 施 的 公 告 表 示 鉴 于 技 术 的 发 展 和 地 缘 政 治 的 背 景 政 府 即 将 得 出 体 导 论 必 必 要 扩 导 导 有 的 设 备 半 导 体 制 造 设 备 的 出 口 管 制',

'timestamp': [

[

990,

1230

],

[

1350,

1590

],

[

1590,

1830

],

[

1830,

2070

],

[

2070,

2310

],

[

2330,

2570

],

[

2890,

3130

],

[

3170,

3350

],

[

3350,

3590

],

[

4130,

4370

],

[

4490,

4730

],

[

4870,

5110

],

[

5110,

5350

],

[

5710,

5950

],

[

6130,

6370

],

[

6490,

6730

],

[

6790,

6990

],

[

6990,

7230

],

[

7230,

7470

],

[

7510,

7650

],

[

7650,

7890

],

[

8010,

8250

],

[

8470,

8710

],

[

8790,

9030

],

[

9090,

9330

],

[

9650,

9890

],

[

9930,

10170

],

[

10170,

10370

],

[

10370,

10610

],

[

10630,

10790

],

[

10790,

11030

],

[

11350,

11590

],

[

11730,

11890

],

[

11890,

12130

],

[

12150,

12310

],

[

12310,

12550

],

[

13190,

13430

],

[

13590,

13830

],

[

13990,

14190

],

[

14190,

14370

],

[

14370,

14590

],

[

14590,

14810

],

[

14810,

15050

],

[

15410,

15650

],

[

15690,

15930

],

[

15950,

16170

],

[

16170,

16290

],

[

16290,

16410

],

[

16410,

16610

],

[

16610,

16850

],

[

17390,

17630

],

[

17690,

17930

],

[

18030,

18250

],

[

18250,

18490

],

[

18610,

18850

],

[

18850,

19090

],

[

19230,

19390

],

[

19390,

19630

],

[

20010,

20250

],

[

20410,

20650

],

[

20670,

20910

],

[

20970,

21210

],

[

21270,

21510

],

[

21790,

22030

],

[

22110,

22350

],

[

22470,

22710

],

[

22710,

22950

],

[

23030,

23210

],

[

23210,

23450

],

[

23450,

23690

],

[

24410,

24650

],

[

24770,

24970

],

[

24970,

25150

],

[

25150,

25330

],

[

25330,

25550

],

[

25550,

25750

],

[

25750,

25990

],

[

26050,

26250

],

[

26250,

26490

],

[

31970,

32210

],

[

32250,

32490

],

[

32509,

32670

],

[

32670,

32870

],

[

32870,

33110

],

[

33270,

33510

],

[

33550,

33690

],

[

33690,

33870

],

[

33870,

34110

],

[

34170,

34370

],

[

34370,

34610

],

[

34610,

34810

],

[

34810,

35030

],

[

35030,

35270

],

[

35330,

35530

],

[

35530,

35770

],

[

35790,

35950

],

[

35950,

36190

],

[

39690,

39850

],

[

39850,

39990

],

[

39990,

40130

],

[

40130,

40250

],

[

40250,

40490

],

[

40510,

40750

],

[

40750,

41210

],

[

41210,

41450

],

[

41570,

41810

],

[

42390,

42610

],

[

42610,

42850

],

[

43370,

43490

],

[

43490,

43730

],

[

43830,

43970

],

[

43970,

45230

],

[

45230,

45430

],

[

45430,

45650

],

[

45650,

46050

],

[

46090,

46530

],

[

46630,

46770

],

[

46770,

47010

],

[

47030,

47190

],

[

47470,

47710

],

[

50790,

50970

],

[

50970,

51210

],

[

51230,

51370

],

[

51370,

51610

],

[

51750,

51850

],

[

51850,

52050

],

[

52050,

52290

],

[

52410,

52550

],

[

52550,

52790

],

[

52790,

52930

],

[

52930,

53170

],

[

53310,

53550

],

[

58570,

58810

],

[

58850,

59090

],

[

59230,

59470

],

[

59470,

59710

],

[

59710,

59950

],

[

59950,

60190

],

[

60190,

60430

],

[

60750,

60990

],

[

61030,

61210

],

[

61210,

61450

],

[

61990,

62230

],

[

62350,

62590

],

[

62730,

62970

],

[

62970,

63210

],

[

63570,

63810

],

[

64030,

64269

],

[

64349,

64590

],

[

64650,

64890

],

[

65010,

65250

],

[

65269,

65430

],

[

65430,

65670

],

[

65770,

66010

],

[

66030,

66270

],

[

66330,

66570

],

[

66650,

66890

],

[

66950,

67190

],

[

67510,

67750

],

[

67790,

68030

],

[

68030,

68230

],

[

68230,

68470

],

[

68490,

68650

],

[

68650,

68890

],

[

69250,

69490

],

[

69610,

69810

],

[

69810,

70010

],

[

70010,

70170

],

[

70170,

70410

],

[

71090,

71330

],

[

71450,

71690

],

[

71970,

72150

],

[

72150,

72370

],

[

72370,

72610

],

[

72630,

72870

],

[

72870,

73110

],

[

73270,

73510

],

[

73550,

73790

],

[

73810,

74030

],

[

74030,

74150

],

[

74150,

74310

],

[

74310,

74470

],

[

74470,

74710

],

[

75250,

75490

],

[

75550,

75790

],

[

75890,

76110

],

[

76110,

76350

],

[

76470,

76710

],

[

76710,

76950

],

[

77090,

77270

],

[

77270,

77510

],

[

78170,

78410

],

[

78450,

78690

],

[

78730,

78970

],

[

79050,

79290

],

[

79550,

79790

],

[

79790,

79970

],

[

79970,

80210

],

[

80330,

80570

],

[

80570,

80810

],

[

80890,

81070

],

[

81070,

81310

],

[

81370,

81610

],

[

82270,

82510

],

[

82630,

82830

],

[

82830,

83010

],

[

83010,

83190

],

[

83190,

83410

],

[

83410,

83610

],

[

83610,

83850

],

[

83910,

84445

]

]

}

]

② ASR + VAD

[

{

'key': 'meeting',

'text': '格 兰 发 布 了 一 份 主 题 为 宣 布 即 将 对 先 进 半 导 体 制 造 设 备 采 取 的 出 口 管 制 措 施 的 公 告 表 示 鉴 于 技 术 的 发 展 和 地 缘 政 治 的 背 景 政 府 已 经 得 出 结 论 有 必 要 扩 大 现 有 的 特 定 半 导 体 制 造 设 备 的 出 口 管 制 欢 迎 大 家 来 体 验 达 摩 院 推 出 的 语 音 识 别 模 型 i heard that you can understand what people say and even though they are age and gender so can you guess my age and gender from my voice 你 好 欢 迎 使 用 百 度 飞 桨 深 度 学 习 框 架 格 兰 发 布 了 一 份 主 题 为 宣 布 即 将 对 先 进 半 导 体 制 造 设 备 采 取 的 出 口 管 制 措 施 的 公 告 表 示 鉴 于 技 术 的 发 展 和 地 缘 政 治 的 背 景 政 府 已 经 得 出 结 论 有 必 要 扩 大 现 有 的 特 定 半 导 体 制 造 设 备 的 出 口 管 制',

'timestamp': [

[

790,

1030

],

[

1030,

1270

],

[

1410,

1650

],

[

1670,

1850

],

[

1850,

2090

],

[

2130,

2370

],

[

2390,

2630

],

[

2930,

3170

],

[

3170,

3410

],

[

3410,

3650

],

[

4170,

4410

],

[

4490,

4730

],

[

4890,

5130

],

[

5150,

5390

],

[

5690,

5930

],

[

6150,

6390

],

[

6470,

6710

],

[

6810,

7010

],

[

7010,

7190

],

[

7190,

7430

],

[

7470,

7670

],

[

7670,

7910

],

[

7990,

8230

],

[

8250,

8490

],

[

8529,

8770

],

[

8790,

9030

],

[

9090,

9330

],

[

9710,

9930

],

[

9930,

10170

],

[

10230,

10410

],

[

10410,

10610

],

[

10610,

10830

],

[

10830,

11070

],

[

11390,

11630

],

[

11750,

11910

],

[

11910,

12110

],

[

12110,

12330

],

[

12330,

12570

],

[

13170,

13410

],

[

13530,

13770

],

[

13930,

14170

],

[

14190,

14350

],

[

14350,

14570

],

[

14570,

14770

],

[

14770,

15010

],

[

15070,

15310

],

[

15410,

15650

],

[

15670,

15910

],

[

15950,

16150

],

[

16150,

16330

],

[

16330,

16430

],

[

16430,

16570

],

[

16570,

16809

],

[

17370,

17610

],

[

17650,

17890

],

[

18010,

18190

],

[

18190,

18430

],

[

18610,

18850

],

[

18850,

19090

],

[

19230,

19390

],

[

19390,

19630

],

[

19970,

20210

],

[

20370,

20570

],

[

20570,

20810

],

[

20910,

21150

],

[

21230,

21470

],

[

21670,

21890

],

[

21890,

22130

],

[

22130,

22370

],

[

22470,

22710

],

[

22730,

22970

],

[

23010,

23210

],

[

23210,

23450

],

[

23450,

23690

],

[

24390,

24630

],

[

24710,

24910

],

[

24910,

25130

],

[

25130,

25310

],

[

25310,

25470

],

[

25470,

25670

],

[

25670,

25910

],

[

25970,

26150

],

[

26150,

26565

],

[

32020,

32240

],

[

32240,

32480

],

[

32500,

32660

],

[

32660,

32900

],

[

32900,

33140

],

[

33140,

33300

],

[

33300,

33540

],

[

33620,

33780

],

[

33780,

33900

],

[

33900,

34140

],

[

34160,

34360

],

[

34360,

34600

],

[

34600,

34820

],

[

34820,

35020

],

[

35020,

35260

],

[

35300,

35540

],

[

35540,

35740

],

[

35740,

35900

],

[

35900,

36255

],

[

39490,

39690

],

[

39690,

39990

],

[

39990,

40150

],

[

40150,

40270

],

[

40270,

40430

],

[

40430,

41070

],

[

41070,

41210

],

[

41210,

41450

],

[

41570,

41810

],

[

41890,

42070

],

[

42070,

42310

],

[

42350,

42550

],

[

42550,

42710

],

[

42710,

42950

],

[

43130,

43370

],

[

43550,

43770

],

[

43770,

44190

],

[

44750,

44990

],

[

45070,

45210

],

[

45210,

45450

],

[

45450,

45770

],

[

45770,

45970

],

[

45970,

46210

],

[

46330,

46530

],

[

46530,

46930

],

[

46970,

47170

],

[

47170,

47370

],

[

47370,

47915

],

[

50150,

50330

],

[

50330,

50570

],

[

50770,

50990

],

[

50990,

51230

],

[

51230,

51390

],

[

51390,

51630

],

[

51650,

51810

],

[

51810,

51990

],

[

51990,

52150

],

[

52150,

52350

],

[

52350,

52570

],

[

52570,

52710

],

[

52710,

52850

],

[

52850,

53070

],

[

53070,

53270

],

[

53270,

53565

],

[

58640,

58880

],

[

58880,

59120

],

[

59260,

59500

],

[

59520,

59700

],

[

59700,

59940

],

[

59980,

60180

],

[

60180,

60420

],

[

60780,

61020

],

[

61020,

61260

],

[

61260,

61500

],

[

62020,

62260

],

[

62340,

62580

],

[

62740,

62980

],

[

63000,

63240

],

[

63540,

63780

],

[

64000,

64240

],

[

64320,

64560

],

[

64660,

64860

],

[

64860,

65040

],

[

65040,

65280

],

[

65320,

65520

],

[

65520,

65760

],

[

65840,

66040

],

[

66040,

66280

],

[

66379,

66620

],

[

66640,

66880

],

[

66940,

67180

],

[

67560,

67780

],

[

67780,

68020

],

[

68080,

68260

],

[

68260,

68460

],

[

68460,

68680

],

[

68680,

68920

],

[

69220,

69460

],

[

69560,

69760

],

[

69760,

69960

],

[

69960,

70120

],

[

70120,

70360

],

[

71020,

71260

],

[

71380,

71620

],

[

71780,

72020

],

[

72040,

72200

],

[

72200,

72420

],

[

72420,

72620

],

[

72620,

72860

],

[

72880,

73120

],

[

73260,

73500

],

[

73520,

73760

],

[

73800,

74000

],

[

74000,

74180

],

[

74180,

74280

],

[

74280,

74420

],

[

74420,

74659

],

[

75220,

75460

],

[

75500,

75740

],

[

75840,

76040

],

[

76040,

76280

],

[

76440,

76680

],

[

76700,

76940

],

[

77080,

77240

],

[

77240,

77480

],

[

77800,

78040

],

[

78220,

78420

],

[

78420,

78660

],

[

78760,

79000

],

[

79080,

79320

],

[

79520,

79740

],

[

79740,

79980

],

[

79980,

80220

],

[

80320,

80560

],

[

80580,

80820

],

[

80860,

81060

],

[

81060,

81300

],

[

81300,

81540

],

[

82260,

82500

],

[

82560,

82760

],

[

82760,

82980

],

[

82980,

83160

],

[

83160,

83320

],

[

83320,

83520

],

[

83520,

83760

],

[

83820,

84000

],

[

84000,

84415

]

]

}

]

③ ASR + VAD + PUNC

[

{

'key': 'meeting',

'text': '格兰发布了一份主题,为宣布即将对先进半导体制造设备采取的出口管制措施的公告表示,鉴于技术的发展和地缘政治的背景,政府已经得出结论,有必要扩大现有的特定半导体制造设备的出口管制。欢迎大家来体验达摩院推出的语音识别模型。 I heard that you can understand what people say, and even though they are age and gender. So can you guess my age and gender from my voice?你好,欢迎使用百度飞桨深度学习框架。格兰发布了一份主题。为宣布即将对先进半导体制造设备采取的出口管制措施的公告表示,鉴于技术的发展和地缘政治的背景,政府已经得出结论,有必要扩大现有的特定半导体制造设备的出口管制。',

'timestamp': [

[

790,

1030

],

[

1030,

1270

],

[

1410,

1650

],

[

1670,

1850

],

[

1850,

2090

],

[

2130,

2370

],

[

2390,

2630

],

[

2930,

3170

],

[

3170,

3410

],

[

3410,

3650

],

[

4170,

4410

],

[

4490,

4730

],

[

4890,

5130

],

[

5150,

5390

],

[

5690,

5930

],

[

6150,

6390

],

[

6470,

6710

],

[

6810,

7010

],

[

7010,

7190

],

[

7190,

7430

],

[

7470,

7670

],

[

7670,

7910

],

[

7990,

8230

],

[

8250,

8490

],

[

8529,

8770

],

[

8790,

9030

],

[

9090,

9330

],

[

9710,

9930

],

[

9930,

10170

],

[

10230,

10410

],

[

10410,

10610

],

[

10610,

10830

],

[

10830,

11070

],

[

11390,

11630

],

[

11750,

11910

],

[

11910,

12110

],

[

12110,

12330

],

[

12330,

12570

],

[

13170,

13410

],

[

13530,

13770

],

[

13930,

14170

],

[

14190,

14370

],

[

14370,

14570

],

[

14570,

14770

],

[

14770,

15010

],

[

15070,

15310

],

[

15410,

15650

],

[

15670,

15910

],

[

15950,

16150

],

[

16150,

16330

],

[

16330,

16430

],

[

16430,

16570

],

[

16570,

16809

],

[

17370,

17610

],

[

17650,

17890

],

[

18010,

18190

],

[

18190,

18430

],

[

18610,

18850

],

[

18850,

19090

],

[

19230,

19390

],

[

19390,

19630

],

[

19990,

20230

],

[

20370,

20570

],

[

20570,

20810

],

[

20910,

21150

],

[

21230,

21470

],

[

21670,

21890

],

[

21890,

22130

],

[

22130,

22370

],

[

22470,

22710

],

[

22730,

22970

],

[

23010,

23210

],

[

23210,

23450

],

[

23450,

23690

],

[

24390,

24630

],

[

24710,

24910

],

[

24910,

25130

],

[

25130,

25310

],

[

25310,

25470

],

[

25470,

25670

],

[

25670,

25910

],

[

25970,

26150

],

[

26150,

26565

],

[

32020,

32240

],

[

32240,

32480

],

[

32500,

32660

],

[

32660,

32900

],

[

32900,

33140

],

[

33140,

33300

],

[

33300,

33540

],

[

33620,

33780

],

[

33780,

33900

],

[

33900,

34140

],

[

34160,

34360

],

[

34360,

34600

],

[

34600,

34820

],

[

34820,

35020

],

[

35020,

35260

],

[

35300,

35540

],

[

35540,

35740

],

[

35740,

35900

],

[

35900,

36255

],

[

39490,

39690

],

[

39690,

39990

],

[

39990,

40150

],

[

40150,

40270

],

[

40270,

40430

],

[

40430,

41070

],

[

41070,

41210

],

[

41210,

41450

],

[

41570,

41810

],

[

41890,

42070

],

[

42070,

42310

],

[

42350,

42550

],

[

42550,

42710

],

[

42710,

42950

],

[

43130,

43370

],

[

43550,

43770

],

[

43770,

44190

],

[

44770,

45010

],

[

45070,

45210

],

[

45210,

45450

],

[

45450,

45770

],

[

45770,

45970

],

[

45970,

46210

],

[

46330,

46530

],

[

46530,

46930

],

[

46970,

47170

],

[

47170,

47370

],

[

47370,

47915

],

[

50150,

50330

],

[

50330,

50570

],

[

50770,

50990

],

[

50990,

51230

],

[

51230,

51390

],

[

51390,

51630

],

[

51650,

51810

],

[

51810,

51990

],

[

51990,

52150

],

[

52150,

52350

],

[

52350,

52570

],

[

52570,

52710

],

[

52710,

52850

],

[

52850,

53070

],

[

53070,

53270

],

[

53270,

53565

],

[

58640,

58880

],

[

58880,

59120

],

[

59260,

59500

],

[

59520,

59700

],

[

59700,

59940

],

[

59980,

60180

],

[

60180,

60420

],

[

60780,

61020

],

[

61020,

61260

],

[

61260,

61500

],

[

62020,

62260

],

[

62340,

62580

],

[

62740,

62980

],

[

63000,

63240

],

[

63540,

63780

],

[

64000,

64240

],

[

64320,

64560

],

[

64660,

64860

],

[

64860,

65040

],

[

65040,

65280

],

[

65320,

65520

],

[

65520,

65760

],

[

65840,

66040

],

[

66040,

66280

],

[

66379,

66620

],

[

66640,

66880

],

[

66940,

67180

],

[

67560,

67780

],

[

67780,

68020

],

[

68080,

68260

],

[

68260,

68460

],

[

68460,

68680

],

[

68680,

68920

],

[

69220,

69460

],

[

69560,

69760

],

[

69760,

69960

],

[

69960,

70120

],

[

70120,

70360

],

[

71020,

71260

],

[

71380,

71620

],

[

71780,

72020

],

[

72040,

72200

],

[

72200,

72420

],

[

72420,

72620

],

[

72620,

72860

],

[

72880,

73120

],

[

73260,

73500

],

[

73520,

73760

],

[

73800,

74000

],

[

74000,

74180

],

[

74180,

74280

],

[

74280,

74420

],

[

74420,

74659

],

[

75220,

75460

],

[

75500,

75740

],

[

75840,

76040

],

[

76040,

76280

],

[

76440,

76680

],

[

76700,

76940

],

[

77080,

77240

],

[

77240,

77480

],

[

77800,

78040

],

[

78220,

78420

],

[

78420,

78660

],

[

78760,

79000

],

[

79080,

79320

],

[

79520,

79740

],

[

79740,

79980

],

[

79980,

80220

],

[

80320,

80560

],

[

80580,

80820

],

[

80860,

81060

],

[

81060,

81300

],

[

81300,

81540

],

[

82260,

82500

],

[

82560,

82760

],

[

82760,

82980

],

[

82980,

83160

],

[

83160,

83320

],

[

83320,

83520

],

[

83520,

83760

],

[

83820,

84000

],

[

84000,

84415

]

]

}

]

④ ASR + VAD + PUNC + SV

[

{

'key': 'meeting',

'text': '格兰发布了一份主题,为宣布即将对先进半导体制造设备采取的出口管制措施的公告表示,鉴于技术的发展和地缘政治的背景,政府已经得出结论,有必要扩大现有的特定半导体制造设备的出口管制。欢迎大家来体验达摩院推出的语音识别模型。 I heard that you can understand what people say, and even though they are age and gender. So can you guess my age and gender from my voice?你好,欢迎使用百度飞桨深度学习框架。格兰发布了一份主题。为宣布即将对先进半导体制造设备采取的出口管制措施的公告表示,鉴于技术的发展和地缘政治的背景,政府已经得出结论,有必要扩大现有的特定半导体制造设备的出口管制。',

'timestamp': [

[

790,

1030

],

[

1030,

1270

],

[

1410,

1650

],

[

1670,

1850

],

[

1850,

2090

],

[

2130,

2370

],

[

2370,

2610

],

[

2930,

3170

],

[

3170,

3410

],

[

3410,

3650

],

[

4170,

4410

],

[

4490,

4730

],

[

4890,

5130

],

[

5150,

5390

],

[

5690,

5930

],

[

6150,

6390

],

[

6470,

6710

],

[

6810,

7010

],

[

7010,

7190

],

[

7190,

7430

],

[

7470,

7670

],

[

7670,

7910

],

[

7990,

8230

],

[

8250,

8490

],

[

8529,

8770

],

[

8790,

9030

],

[

9090,

9330

],

[

9710,

9930

],

[

9930,

10170

],

[

10230,

10410

],

[

10410,

10610

],

[

10610,

10830

],

[

10830,

11070

],

[

11390,

11630

],

[

11750,

11910

],

[

11910,

12110

],

[

12110,

12330

],

[

12330,

12570

],

[

13170,

13410

],

[

13530,

13770

],

[

13930,

14170

],

[

14190,

14370

],

[

14370,

14570

],

[

14570,

14770

],

[

14770,

15010

],

[

15070,

15310

],

[

15410,

15650

],

[

15670,

15910

],

[

15950,

16150

],

[

16150,

16330

],

[

16330,

16430

],

[

16430,

16570

],

[

16570,

16809

],

[

17370,

17610

],

[

17650,

17890

],

[

18010,

18190

],

[

18190,

18430

],

[

18610,

18850

],

[

18850,

19090

],

[

19230,

19390

],

[

19390,

19630

],

[

19970,

20210

],

[

20370,

20570

],

[

20570,

20810

],

[

20910,

21150

],

[

21230,

21470

],

[

21670,

21890

],

[

21890,

22130

],

[

22130,

22370

],

[

22470,

22710

],

[

22730,

22970

],

[

23010,

23210

],

[

23210,

23450

],

[

23450,

23690

],

[

24390,

24630

],

[

24710,

24910

],

[

24910,

25130

],

[

25130,

25310

],

[

25310,

25470

],

[

25470,

25670

],

[

25670,

25910

],

[

25970,

26150

],

[

26150,

26565

],

[

32020,

32240

],

[

32240,

32480

],

[

32500,

32660

],

[

32660,

32900

],

[

32900,

33140

],

[

33140,

33300

],

[

33300,

33540

],

[

33620,

33780

],

[

33780,

33900

],

[

33900,

34140

],

[

34160,

34360

],

[

34360,

34600

],

[

34600,

34820

],

[

34820,

35020

],

[

35020,

35260

],

[

35300,

35540

],

[

35540,

35740

],

[

35740,

35900

],

[

35900,

36255

],

[

39490,

39690

],

[

39690,

39990

],

[

39990,

40150

],

[

40150,

40270

],

[

40270,

40430

],

[

40430,

41070

],

[

41070,

41210

],

[

41210,

41450

],

[

41570,

41810

],

[

41890,

42070

],

[

42070,

42310

],

[

42350,

42550

],

[

42550,

42710

],

[

42710,

42950

],

[

43130,

43370

],

[

43550,

43770

],

[

43770,

44190

],

[

44770,

45010

],

[

45070,

45210

],

[

45210,

45450

],

[

45450,

45770

],

[

45770,

45970

],

[

45970,

46210

],

[

46330,

46530

],

[

46530,

46930

],

[

46970,

47170

],

[

47170,

47370

],

[

47370,

47915

],

[

50150,

50330

],

[

50330,

50570

],

[

50770,

50990

],

[

50990,

51230

],

[

51230,

51390

],

[

51390,

51630

],

[

51650,

51810

],

[

51810,

51990

],

[

51990,

52150

],

[

52150,

52350

],

[

52350,

52570

],

[

52570,

52710

],

[

52710,

52850

],

[

52850,

53070

],

[

53070,

53270

],

[

53270,

53565

],

[

58640,

58880

],

[

58880,

59120

],

[

59260,

59500

],

[

59520,

59700

],

[

59700,

59940

],

[

59980,

60180

],

[

60180,

60420

],

[

60780,

61020

],

[

61020,

61260

],

[

61260,

61500

],

[

62020,

62260

],

[

62340,

62580

],

[

62740,

62980

],

[

63000,

63240

],

[

63540,

63780

],

[

64000,

64240

],

[

64320,

64560

],

[

64660,

64860

],

[

64860,

65040

],

[

65040,

65280

],

[

65320,

65520

],

[

65520,

65760

],

[

65840,

66040

],

[

66040,

66280

],

[

66379,

66620

],

[

66640,

66880

],

[

66940,

67180

],

[

67560,

67780

],

[

67780,

68020

],

[

68080,

68260

],

[

68260,

68460

],

[

68460,

68680

],

[

68680,

68920

],

[

69220,

69460

],

[

69560,

69760

],

[

69760,

69960

],

[

69960,

70120

],

[

70120,

70360

],

[

71020,

71260

],

[

71380,

71620

],

[

71780,

72020

],

[

72040,

72200

],

[

72200,

72420

],

[

72420,

72620

],

[

72620,

72860

],

[

72880,

73120

],

[

73260,

73500

],

[

73520,

73760

],

[

73800,

74000

],

[

74000,

74180

],

[

74180,

74280

],

[

74280,

74420

],

[

74420,

74659

],

[

75220,

75460

],

[

75500,

75740

],

[

75840,

76040

],

[

76040,

76280

],

[

76440,

76680

],

[

76700,

76940

],

[

77080,

77240

],

[

77240,

77480

],

[

77800,

78040

],

[

78220,

78420

],

[

78420,

78660

],

[

78760,

79000

],

[

79080,

79320

],

[

79520,

79740

],

[

79740,

79980

],

[

79980,

80220

],

[

80320,

80560

],

[

80580,

80820

],

[

80860,

81060

],

[

81060,

81300

],

[

81300,

81540

],

[

82260,

82500

],

[

82560,

82760

],

[

82760,

82980

],

[

82980,

83160

],

[

83160,

83320

],

[

83320,

83520

],

[

83520,

83760

],

[

83820,

84000

],

[

84000,

84415

]

],

'sentence_info': [

{

'text': '格兰发布了一份主题,',

'start': 790,

'end': 3410,

'timestamp': [

[

790,

1030

],

[

1030,

1270

],

[

1410,

1650

],

[

1670,

1850

],

[

1850,

2090

],

[

2130,

2370

],

[

2370,

2610

],

[

2930,

3170

],

[

3170,

3410

]

],

'spk': 0

},

{

'text': '为宣布即将对先进半导体制造设备采取的出口管制措施的公告表示,',

'start': 3410,

'end': 12570,

'timestamp': [

[

3410,

3650

],

[

4170,

4410

],

[

4490,

4730

],

[

4890,

5130

],

[

5150,

5390

],

[

5690,

5930

],

[

6150,

6390

],

[

6470,

6710

],

[

6810,

7010

],

[

7010,

7190

],

[

7190,

7430

],

[

7470,

7670

],

[

7670,

7910

],

[

7990,

8230

],

[

8250,

8490

],

[

8529,

8770

],

[

8790,

9030

],

[

9090,

9330

],

[

9710,

9930

],

[

9930,

10170

],

[

10230,

10410

],

[

10410,

10610

],

[

10610,

10830

],

[

10830,

11070

],

[

11390,

11630

],

[

11750,

11910

],

[

11910,

12110

],

[

12110,

12330

],

[

12330,

12570

]

],

'spk': 0

},

{

'text': '鉴于技术的发展和地缘政治的背景,',

'start': 13170,

'end': 16809,

'timestamp': [

[

13170,

13410

],

[

13530,

13770

],

[

13930,

14170

],

[

14190,

14370

],

[

14370,

14570

],

[

14570,

14770

],

[

14770,

15010

],

[

15070,

15310

],

[

15410,

15650

],

[

15670,

15910

],

[

15950,

16150

],

[

16150,

16330

],

[

16330,

16430

],

[

16430,

16570

],

[

16570,

16809

]

],

'spk': 0

},

{

'text': '政府已经得出结论,',

'start': 17370,

'end': 19630,

'timestamp': [

[

17370,

17610

],

[

17650,

17890

],

[

18010,

18190

],

[

18190,

18430

],

[

18610,

18850

],

[

18850,

19090

],

[

19230,

19390

],

[

19390,

19630

]

],

'spk': 0

},

{

'text': '有必要扩大现有的特定半导体制造设备的出口管制。',

'start': 19970,

'end': 26565,

'timestamp': [

[

19970,

20210

],

[

20370,

20570

],

[

20570,

20810

],

[

20910,

21150

],

[

21230,

21470

],

[

21670,

21890

],

[

21890,

22130

],

[

22130,

22370

],

[

22470,

22710

],

[

22730,

22970

],

[

23010,

23210

],

[

23210,

23450

],

[

23450,

23690

],

[

24390,

24630

],

[

24710,

24910

],

[

24910,

25130

],

[

25130,

25310

],

[

25310,

25470

],

[

25470,

25670

],

[

25670,

25910

],

[

25970,

26150

],

[

26150,

26565

]

],

'spk': 0

},

{

'text': '欢迎大家来体验达摩院推出的语音识别模型。',

'start': 32020,

'end': 36255,

'timestamp': [

[

32020,

32240

],

[

32240,

32480

],

[

32500,

32660

],

[

32660,

32900

],

[

32900,

33140

],

[

33140,

33300

],

[

33300,

33540

],

[

33620,

33780

],

[

33780,

33900

],

[

33900,

34140

],

[

34160,

34360

],

[

34360,

34600

],

[

34600,

34820

],

[

34820,

35020

],

[

35020,

35260

],

[

35300,

35540

],

[

35540,

35740

],

[

35740,

35900

],

[

35900,

36255

]

],

'spk': 1

},

{

'text': ' i heard that you can understand what people say,',

'start': 39490,

'end': 41810,

'timestamp': [

[

39490,

39690

],

[

39690,

39990

],

[

39990,

40150

],

[

40150,

40270

],

[

40270,

40430

],

[

40430,

41070

],

[

41070,

41210

],

[

41210,

41450

],

[

41570,

41810

]

],

'spk': 2

},

{

'text': ' and even though they are age and gender。',

'start': 41890,

'end': 44190,

'timestamp': [

[

41890,

42070

],

[

42070,

42310

],

[

42350,

42550

],

[

42550,

42710

],

[

42710,

42950

],

[

43130,

43370

],

[

43550,

43770

],

[

43770,

44190

]

],

'spk': 2

},

{

'text': ' so can you guess my age and gender from my voice?',

'start': 44770,

'end': 47915,

'timestamp': [

[

44770,

45010

],

[

45070,

45210

],

[

45210,

45450

],

[

45450,

45770

],

[

45770,

45970

],

[

45970,

46210

],

[

46330,

46530

],

[

46530,

46930

],

[

46970,

47170

],

[

47170,

47370

],

[

47370,

47915

]

],

'spk': 2

},

{

'text': '你好,',

'start': 50150,

'end': 50570,

'timestamp': [

[

50150,

50330

],

[

50330,

50570

]

],

'spk': 3

},

{

'text': '欢迎使用百度飞桨深度学习框架。',

'start': 50770,

'end': 53565,

'timestamp': [

[

50770,

50990

],

[

50990,

51230

],

[

51230,

51390

],

[

51390,

51630

],

[

51650,

51810

],

[

51810,

51990

],

[

51990,

52150

],

[

52150,

52350

],

[

52350,

52570

],

[

52570,

52710

],

[

52710,

52850

],

[

52850,

53070

],

[

53070,

53270

],

[

53270,

53565

]

],

'spk': 3

},

{

'text': '格兰发布了一份主题。',

'start': 58640,

'end': 61260,

'timestamp': [

[

58640,

58880

],

[

58880,

59120

],

[

59260,

59500

],

[

59520,

59700

],

[

59700,

59940

],

[

59980,

60180

],

[

60180,

60420

],

[

60780,

61020

],

[

61020,

61260

]

],

'spk': 0

},

{

'text': '为宣布即将对先进半导体制造设备采取的出口管制措施的公告表示,',

'start': 61260,

'end': 70360,

'timestamp': [

[

61260,

61500

],

[

62020,

62260

],

[

62340,

62580

],

[

62740,

62980

],

[

63000,

63240

],

[

63540,

63780

],

[

64000,

64240

],

[

64320,

64560

],

[

64660,

64860

],

[

64860,

65040

],

[

65040,

65280

],

[

65320,

65520

],

[

65520,

65760

],

[

65840,

66040

],

[

66040,

66280

],

[

66379,

66620

],

[

66640,

66880

],

[

66940,

67180

],

[

67560,

67780

],

[

67780,

68020

],

[

68080,

68260

],

[

68260,

68460

],

[

68460,

68680

],

[

68680,

68920

],

[

69220,

69460

],

[

69560,

69760

],

[

69760,

69960

],

[

69960,

70120

],

[

70120,

70360

]

],

'spk': 0

},

{

'text': '鉴于技术的发展和地缘政治的背景,',

'start': 71020,

'end': 74659,

'timestamp': [

[

71020,

71260

],

[

71380,

71620

],

[

71780,

72020

],

[

72040,

72200

],

[

72200,

72420

],

[

72420,

72620

],

[

72620,

72860

],

[

72880,

73120

],

[

73260,

73500

],

[

73520,

73760

],

[

73800,

74000

],

[

74000,

74180

],

[

74180,

74280

],

[

74280,

74420

],

[

74420,

74659

]

],

'spk': 0

},

{

'text': '政府已经得出结论,',

'start': 75220,

'end': 77480,

'timestamp': [

[

75220,

75460

],

[

75500,

75740

],

[

75840,

76040

],

[

76040,

76280

],

[

76440,

76680

],

[

76700,

76940

],

[

77080,

77240

],

[

77240,

77480

]

],

'spk': 0

},

{

'text': '有必要扩大现有的特定半导体制造设备的出口管制。',

'start': 77800,

'end': 84415,

'timestamp': [

[

77800,

78040

],

[

78220,

78420

],

[

78420,

78660

],

[

78760,

79000

],

[

79080,

79320

],

[

79520,

79740

],

[

79740,

79980

],

[

79980,

80220

],

[

80320,

80560

],

[

80580,

80820

],

[

80860,

81060

],

[

81060,

81300

],

[

81300,

81540

],

[

82260,

82500

],

[

82560,

82760

],

[

82760,

82980

],

[

82980,

83160

],

[

83160,

83320

],

[

83320,

83520

],

[

83520,

83760

],

[

83820,

84000

],

[

84000,

84415

]

],

'spk': 0

}

]

}

]

实时模型

ASR

- iic/speech_paraformer-large_asr_nat-zh-cn-16k-common-vocab8404-online

- paraformer-zh-streaming

import soundfile

import os

from funasr import AutoModel

# 注:`chunk_size`为流式延时配置,`[0,10,5]`表示上屏实时出字粒度为`10*60=600ms`,未来信息为`5*60=300ms`。

# 每次推理输入为`600ms`(采样点数为`16000*0.6=960`),输出为对应文字,最后一个语音片段输入需要设置`is_final=True`来强制输出最后一个字。

chunk_size = [0, 10, 5] #[0, 10, 5] 600ms, [0, 8, 4] 480ms

encoder_chunk_look_back = 4 #number of chunks to lookback for encoder self-attention

decoder_chunk_look_back = 1 #number of encoder chunks to lookback for decoder cross-attention

model = AutoModel(

model="paraformer-zh-streaming",

device="mps",

disable_pbar=True,

)

wav_file = "wav/meeting.wav"

speech, sample_rate = soundfile.read(wav_file)

chunk_stride = chunk_size[1] * 960 # 600ms

cache = {}

for start in range(0, len(speech), chunk_stride):

end = start + chunk_stride

speech_chunk = speech[start : end]

# 是否是最后一个 chunk(end >= 总长度)

is_final = end >= len(speech)

res = model.generate(

input=speech_chunk,

cache=cache,

is_final=is_final,

chunk_size=chunk_size,

encoder_chunk_look_back=encoder_chunk_look_back,

decoder_chunk_look_back=decoder_chunk_look_back,

)

print(res)

[{'key': 'rand_key_2yW4Acq9GFz6Y', 'text': ''}]

[{'key': 'rand_key_1t9EwL56nGisi', 'text': ''}]

[{'key': 'rand_key_WgNZq6ITZM5jt', 'text': '格兰'}]

[{'key': 'rand_key_gUe52RvEJgwBu', 'text': '发布了'}]

[{'key': 'rand_key_NO6n9JEC3HqdZ', 'text': '一份'}]

[{'key': 'rand_key_6J6afU1zT0YQO', 'text': '主'}]

[{'key': 'rand_key_aNF03vpUuT3em', 'text': '题为'}]

[{'key': 'rand_key_6KopZ9jZICffu', 'text': '宣'}]

[{'key': 'rand_key_4G7FgtJsThJv0', 'text': '布即'}]

[{'key': 'rand_key_7In9ZMJLsCfMZ', 'text': '将'}]

[{'key': 'rand_key_yuKpslm0lcNQq', 'text': '对'}]

[{'key': 'rand_key_EefRWi4j7c1f5', 'text': '先进'}]

[{'key': 'rand_key_S71IRz1THrHZp', 'text': '半导体'}]

[{'key': 'rand_key_2n5RL08ALrCFQ', 'text': '制造'}]

[{'key': 'rand_key_PS6YwfuNhFLOv', 'text': '设备采'}]

[{'key': 'rand_key_2mpbUrhToxYkv', 'text': '取的'}]

[{'key': 'rand_key_B0dgYj2Soc0KO', 'text': '出'}]

[{'key': 'rand_key_67IMRebhOmM1K', 'text': '口管'}]

[{'key': 'rand_key_hzfx1hcMbm9lT', 'text': '制措施'}]

[{'key': 'rand_key_hEnUZd7RbIBNg', 'text': '的'}]

[{'key': 'rand_key_1qeoePtwBldGD', 'text': '公告表'}]

[{'key': 'rand_key_cMgSzmqw5UE15', 'text': '示'}]

[{'key': 'rand_key_6KkSRn9XdYRk2', 'text': '鉴'}]

[{'key': 'rand_key_kvHqhM6CQlaER', 'text': '于技'}]

[{'key': 'rand_key_A5K3G6tPwy1Qq', 'text': '术的发'}]

[{'key': 'rand_key_jJSaDVfvVcIri', 'text': '展和'}]

[{'key': 'rand_key_pW9EwNsRwL85O', 'text': '地缘'}]

[{'key': 'rand_key_2NiTtyVA1PfaM', 'text': '政治的'}]

[{'key': 'rand_key_mSvkpoOCyT4RK', 'text': '背景'}]

[{'key': 'rand_key_3Acz3SKAXQTck', 'text': '政'}]

[{'key': 'rand_key_CeqSkCH4F6JMW', 'text': '府已'}]

[{'key': 'rand_key_a4cFut1DdZ04Z', 'text': '经得'}]

[{'key': 'rand_key_AmJ9Of1Uiaz8R', 'text': '出结论'}]

[{'key': 'rand_key_AuanaTWa0RHNg', 'text': ''}]

[{'key': 'rand_key_mhMPm3trSlgE2', 'text': '有必'}]

[{'key': 'rand_key_7zOfbr6CZYh3q', 'text': '要扩'}]

[{'key': 'rand_key_iPH0PPwh3jr2b', 'text': '大现'}]

[{'key': 'rand_key_ccnRqJu8x8K62', 'text': '有的'}]

[{'key': 'rand_key_c2V9SMPFTP5DO', 'text': '特定'}]

[{'key': 'rand_key_Bx3IlnyLsaijr', 'text': '半导体'}]

[{'key': 'rand_key_vvYxTfvXNccrk', 'text': ''}]

[{'key': 'rand_key_jLsxzJishEUp7', 'text': '制造'}]

[{'key': 'rand_key_dtl2HUetz1vtA', 'text': '设备的'}]

[{'key': 'rand_key_ggJ6EEv1ZZvhE', 'text': '出口管'}]

[{'key': 'rand_key_hSFBctvVR5j6k', 'text': '制'}]

[{'key': 'rand_key_OKyZOfeZfmVod', 'text': ''}]

[{'key': 'rand_key_yagzJHsC6FYLT', 'text': ''}]

[{'key': 'rand_key_RnBfxoqLXkBmw', 'text': ''}]

[{'key': 'rand_key_he032Sb5HCWRm', 'text': ''}]

[{'key': 'rand_key_hFzqnPc8ZnNjg', 'text': ''}]

[{'key': 'rand_key_mDyxI0jgMFjKz', 'text': ''}]

[{'key': 'rand_key_ORB4HFR64u1FF', 'text': ''}]

[{'key': 'rand_key_OQ3mIN7oavT9V', 'text': ''}]

[{'key': 'rand_key_5u0ucHj3qMYj1', 'text': '欢'}]

[{'key': 'rand_key_yLsTTZEeZfH36', 'text': '迎大'}]

[{'key': 'rand_key_ceoictaW2Cv3k', 'text': '家来体'}]

[{'key': 'rand_key_Zj3PD9xGy5HGc', 'text': '验达摩'}]

[{'key': 'rand_key_KfRYZHWMeVB5W', 'text': '院推出'}]

[{'key': 'rand_key_nsI5MA0E2YyML', 'text': '的语'}]

[{'key': 'rand_key_o23ZbQ4aVltGK', 'text': '音识别'}]

[{'key': 'rand_key_qveF3q80tXAyZ', 'text': '模型'}]

[{'key': 'rand_key_ydkP7ipsU1vd8', 'text': ''}]

[{'key': 'rand_key_cEAjF43MTfRSj', 'text': ''}]

[{'key': 'rand_key_ZwAcNDyDdgEXj', 'text': ''}]

[{'key': 'rand_key_bcMNiOugSJPwm', 'text': ''}]

[{'key': 'rand_key_yYXXFh4dNSDNO', 'text': ''}]

[{'key': 'rand_key_7vPhRTNsY2i96', 'text': 'i'}]

[{'key': 'rand_key_yZs6V39RZhH3Y', 'text': 'ard that you can'}]

[{'key': 'rand_key_mcYzCxWmDwYOe', 'text': 'stand'}]

[{'key': 'rand_key_9c57cFq5b8HQK', 'text': 'what people'}]

[{'key': 'rand_key_K4nofX044OXGS', 'text': 'say and even'}]

[{'key': 'rand_key_HAGt8hjB5KB7f', 'text': 'know they'}]

[{'key': 'rand_key_7gAegAXjU9bYC', 'text': 'are'}]

[{'key': 'rand_key_BRAbF639uUqfw', 'text': 'age and'}]

[{'key': 'rand_key_ehwSbwwla1o0x', 'text': ''}]

[{'key': 'rand_key_eM5jnanQRU85h', 'text': 'der so can'}]

[{'key': 'rand_key_VasxSb7Mo2jlD', 'text': 'you guess'}]

[{'key': 'rand_key_hEwT9qib9nxvE', 'text': 'my age'}]

[{'key': 'rand_key_9ss749JOulLfg', 'text': 'and gender'}]

[{'key': 'rand_key_ILtky5j8iZouG', 'text': 'from my'}]

[{'key': 'rand_key_ppWlsxAQc3iMb', 'text': ''}]

[{'key': 'rand_key_zeSeiAtJAV7jL', 'text': ''}]

[{'key': 'rand_key_BtOwfpCOxO8Hd', 'text': ''}]

[{'key': 'rand_key_yAaA9U5uCnxs8', 'text': 'ice'}]

[{'key': 'rand_key_Ef8lYgrhJMSjY', 'text': '你好'}]

[{'key': 'rand_key_SC7zlXABlp9Dv', 'text': '欢迎'}]

[{'key': 'rand_key_7HjwDOOfEWnsa', 'text': '使用百'}]

[{'key': 'rand_key_1SCNDanthXOtI', 'text': '度飞桨'}]

[{'key': 'rand_key_MjBTWEfRFW9oI', 'text': '深度学习'}]

[{'key': 'rand_key_WzrObhr4Qcaqz', 'text': '框'}]

[{'key': 'rand_key_H4LTzCgVqws3W', 'text': ''}]

[{'key': 'rand_key_R6mMfceYqtIvh', 'text': ''}]

[{'key': 'rand_key_H3p56WkeA3ssH', 'text': ''}]

[{'key': 'rand_key_iKHOnIgA9OIzV', 'text': ''}]

[{'key': 'rand_key_X5YrsCxKOikhS', 'text': ''}]

[{'key': 'rand_key_hyzLDiJQtwOEV', 'text': ''}]

[{'key': 'rand_key_AnEFSGuFP9dCt', 'text': ''}]

[{'key': 'rand_key_jVFd4NnbwEz4a', 'text': ''}]

[{'key': 'rand_key_2HeR3fR8V7Qza', 'text': '架格'}]

[{'key': 'rand_key_xchNar3OSsU5o', 'text': '兰发'}]

[{'key': 'rand_key_jWKsmgBDTvykv', 'text': '布了一'}]

[{'key': 'rand_key_AP5RBjC7TjHui', 'text': '份主'}]

[{'key': 'rand_key_n6lCwYyBZFyUo', 'text': '题为'}]

[{'key': 'rand_key_YmC8nLTd5ycoO', 'text': ''}]

[{'key': 'rand_key_f3lxdVORloNtN', 'text': '宣布'}]

[{'key': 'rand_key_fT3GWsX44wADd', 'text': '即将'}]

[{'key': 'rand_key_OSHQ9P9J7V7BL', 'text': '对'}]

[{'key': 'rand_key_DFqTEnvrccdkw', 'text': '先进'}]

[{'key': 'rand_key_asPaieYBRoMzJ', 'text': '半导'}]

[{'key': 'rand_key_6oDmvMgM4fYuu', 'text': '体制造'}]

[{'key': 'rand_key_ID5uqbHcmxfn3', 'text': '设备'}]

[{'key': 'rand_key_Hwm1mqRUVttH3', 'text': '采取'}]

[{'key': 'rand_key_yqEw3Tpct7Jea', 'text': '的'}]

[{'key': 'rand_key_DFUCd6ZAFDChf', 'text': '出口'}]

[{'key': 'rand_key_fpg0WjA46nCNe', 'text': '管制措'}]

[{'key': 'rand_key_0BJW41zc9lpFo', 'text': '施'}]

[{'key': 'rand_key_i13r7wuBgJ5sN', 'text': '的公告'}]

[{'key': 'rand_key_IYmTsXCGMDIOq', 'text': '表示'}]

[{'key': 'rand_key_robhNYTglUApn', 'text': ''}]

[{'key': 'rand_key_s4VQaVIGB2dhy', 'text': '鉴于'}]

[{'key': 'rand_key_PrhVKwoRTTIQs', 'text': '技术的'}]

[{'key': 'rand_key_oV15peHtRuoxO', 'text': '发展'}]

[{'key': 'rand_key_EsLkiZaJGuxLO', 'text': '和地'}]

[{'key': 'rand_key_bZi4zjlGeiWnY', 'text': '缘政治'}]

[{'key': 'rand_key_6XFKXSnpUi0oW', 'text': '的背景'}]

[{'key': 'rand_key_ywMLiOF56gN1b', 'text': ''}]

[{'key': 'rand_key_HMwFDta9oJPkQ', 'text': '政府'}]

[{'key': 'rand_key_4FZ9VEIuT2fqi', 'text': '已经'}]

[{'key': 'rand_key_MzTm0uYsy8dnc', 'text': '得出'}]

[{'key': 'rand_key_uUV9pv3CQUQQo', 'text': '结论'}]

[{'key': 'rand_key_qwQktbwKIdUOj', 'text': '有'}]

[{'key': 'rand_key_wbFOdbpcao8Pu', 'text': '必要'}]

[{'key': 'rand_key_e0dwQBi57nCBj', 'text': '扩大'}]

[{'key': 'rand_key_wtlPvUYVAyaA5', 'text': '现有'}]

[{'key': 'rand_key_qIIZVRTDWcK6h', 'text': '的特'}]

[{'key': 'rand_key_A9ykaGiN3QG1U', 'text': '定半导'}]

[{'key': 'rand_key_Sjfvp110lp8b9', 'text': '体'}]

[{'key': 'rand_key_Z5kVY1RJkTfB7', 'text': '制'}]

[{'key': 'rand_key_3MgNODTjNMcqv', 'text': '造设备'}]

[{'key': 'rand_key_ZVUybO74cFfws', 'text': '的出'}]

[{'key': 'rand_key_QjDpGwkVWzvrZ', 'text': '口管制'}]

[{'key': 'rand_key_F8zatH5sJEcXI', 'text': ''}]

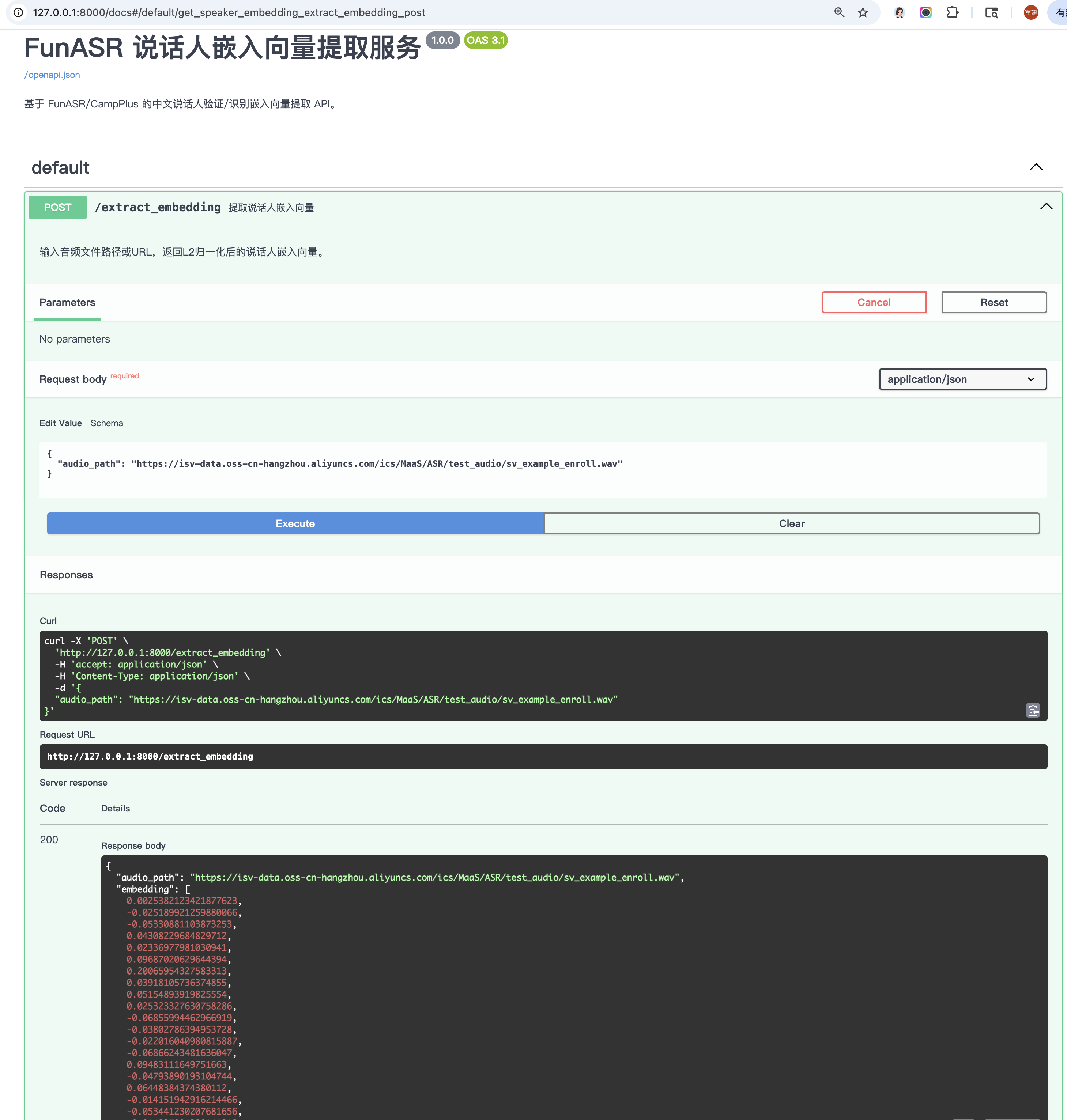

VAD (fsmn-vad)

from funasr import AutoModel

import soundfile as sf

# 流式 VAD chunk 大小(毫秒)

chunk_size = 200 # e.g. 200ms

# 加载 VAD 模型

model = AutoModel(

model="fsmn-vad",

device="mps",

disable_pbar=True,

)

# 读取测试 wav

wav_file = "wav/meeting.wav"

speech, sample_rate = sf.read(wav_file)

# 如果是多通道(双声道),自动转单通道

if speech.ndim > 1:

speech = speech.mean(axis=1)

# 计算每个 chunk 对应的采样点数

chunk_stride = int(chunk_size * sample_rate / 1000)

if chunk_stride <= 0:

raise ValueError("chunk_stride must be > 0")

# 流式缓存

cache = {}

for start in range(0, len(speech), chunk_stride):

end = start + chunk_stride

speech_chunk = speech[start:end]

# 是否为最后一个 chunk

is_final = end >= len(speech)

# 推理(实时 VAD)

res = model.generate(

input=speech_chunk,

cache=cache,

is_final=is_final,

chunk_size=chunk_size, # 单位为 ms

)

if len(res[0]["value"]):

print(res)

[{'key': 'rand_key_NO6n9JEC3HqdZ', 'value': [[500, -1]]}]

[{'key': 'rand_key_Z5kVY1RJkTfB7', 'value': [[-1, 26910]]}]

[{'key': 'rand_key_uftbFaWqmWzyB', 'value': [[31730, -1]]}]

[{'key': 'rand_key_4ORzcopOsvk9p', 'value': [[-1, 36550]]}]

[{'key': 'rand_key_qlULusyOcsJBc', 'value': [[39220, -1]]}]

[{'key': 'rand_key_DyRmAFmkzdY8Z', 'value': [[-1, 48160]]}]

[{'key': 'rand_key_7z8DTIn5xTcbz', 'value': [[49880, -1]]}]

[{'key': 'rand_key_O2re3QuuJPsdY', 'value': [[-1, 53790]]}]

[{'key': 'rand_key_D6PHdYUm9qDSe', 'value': [[58350, -1]]}]

[{'key': 'rand_key_tpKSfjMz2X9xV', 'value': [[-1, 84770]]}]

离线模型

语音识别

Fun-ASR-Nano-2512

不需要搭配标点恢复模型(

PUNC);可以和端点检测模型(VAD)一块使用;不可以和说话人分离模型(SV)一块使用。

- 安装更新 FunASR 库

pip install -U funasr torch torchaudio

- 下载

model.py文件wget https://raw.githubusercontent.com/FunAudioLLM/Fun-ASR/refs/heads/main/model.py

from funasr import AutoModel

# 中文、英文、日文 for Fun-ASR-Nano-2512

# 中文、英文、粤语、日文、韩文、越南语、印尼语、泰语、马来语、菲律宾语、阿拉伯语、

# 印地语、保加利亚语、克罗地亚语、捷克语、丹麦语、荷兰语、爱沙尼亚语、芬兰语、希腊语、

# 匈牙利语、爱尔兰语、拉脱维亚语、立陶宛语、马耳他语、波兰语、葡萄牙语、罗马尼亚语、

# 斯洛伐克语、斯洛文尼亚语、瑞典语 for Fun-ASR-MLT-Nano-2512

model_dir = "FunAudioLLM/Fun-ASR-Nano-2512"

model = AutoModel(

model=model_dir,

device="mps",

disable_update=True,

disable_pbar=True,

trust_remote_code=True,

remote_code="./model.py",

)

wav_path = "meeting.wav"

res = model.generate(

input=[wav_path],

cache={},

batch_size=1,

)

text = res[0]["text"]

print(text)

参数说明:

model_dir: 模型名称或本地磁盘上的模型路径。trust_remote_code: 是否信任远程代码以加载自定义模型实现。remote_code: 指定特定模型代码的位置(例如当前目录中的model.py),支持绝对路径和相对路径。device: 指定使用的设备,如 “cuda:0”、”cpu” 或 “mps”。

格兰发布了一份主题为“宣布即将对先进半导体制造设备采取的出口管制”措施的公告,表示,鉴于技术的发展和地缘政治的背景,政府已经得出结论,有必要扩大现有的特定半导体制造设备的出口管制。欢迎大家来体验达摩院推出的语音识别模型。I heard that you can understand what people say and even know their age and gender,so can you guess my age and gender from my voice?你好,欢迎使用百度飞桨深度学习框架。格兰发布了一份主题为“宣布即将对先进半导体制造设备采取的出口管制”措施的公告,表示,鉴于技术的发展和地缘政治的背景,政府已经得出结论,有必要扩大现有的特定半导体制造设备的出口管制。

from funasr import AutoModel

model_dir = "FunAudioLLM/Fun-ASR-Nano-2512"

model = AutoModel(

model=model_dir,

device="mps",

disable_update=True,

disable_pbar=True,

trust_remote_code=True,

remote_code="./model.py",

)

wav_path = "meeting.wav"

res = model.generate(

input=[wav_path],

cache={},

batch_size=1,

hotwords=["荷兰"],

language="中文",

itn=True, # or False

)

text = res[0]["text"]

print(text)