Prompt Engineering with Llama 2

Prompt Engineering Techniques(提示工程技术)

In-Context Learning (上下文学习)

Standard prompt with instruction (标准提示与指令)

- So far, you have been stating the instruction explicitly in the prompt: (到目前为止,您一直在明确在提示中陈述指令:)

prompt = """

What is the sentiment of:

Hi Amit, thanks for the thoughtful birthday card!

"""

response = llama(prompt)

print(response)

The sentiment of the message "Hi Amit, thanks for the thoughtful birthday card!" is positive. The use of the word "thoughtful" implies that the sender appreciated the effort put into the card, and the tone is friendly and sincere.

中文

prompt = """

消息的情感是什么:

嗨,阿米特,谢谢你那份富有思想的生日卡!

"""

response = llama(prompt)

print(response)

消息“嗨,阿米特,谢谢你那份富有思想的生日卡!”的情感是积极的。使用“富有思想”一词意味着发件人对卡片中的努力表示赞赏,语气友好真诚。

Zero-shot Prompting (零样本提示)

- Here is an example of zero-shot prompting. (这是一个零样本提示的例子。)

- You are prompting the model to see if it can infer the task from the structure of your prompt. (您正在提示模型,看它是否可以从提示的结构中推断出任务。)

- In zero-shot prompting, you only provide the structure to the model, but without any examples of the completed task. (在零样本提示中,您只向模型提供结构,而没有提供任何完成任务的示例。)

prompt = """

Message: Hi Amit, thanks for the thoughtful birthday card!

Sentiment: ?

"""

response = llama(prompt)

print(response)

The sentiment of the message is "Appreciation" or "Gratitude". The sender is expressing their appreciation for the birthday card that Amit sent.

中文

prompt = """

消息:嗨,阿米特,谢谢你那份富有思想的生日卡!

情感:?

"""

response = llama(prompt)

print(response)

消息的情感是“感激”或“感恩”。发件人表达了对阿米特寄来的生日卡的感激之情。

Few-shot Prompting (少样本提示)

- Here is an example of few-shot prompting. (这是一个少样本提示的例子。)

- In few-shot prompting, you not only provide the structure to the model, but also two or more examples. (在少样本提示中,您不仅向模型提供结构,还提供两个或更多示例。)

- You are prompting the model to see if it can infer the task from the structure, as well as the examples in your prompt. (您正在提示模型,看它是否可以从结构和提示中的示例推断出任务。)

prompt = """

Message: Hi Dad, you're 20 minutes late to my piano recital!

Sentiment: Negative

Message: Can't wait to order pizza for dinner tonight

Sentiment: Positive

Message: Hi Amit, thanks for the thoughtful birthday card!

Sentiment: ?

"""

response = llama(prompt)

print(response)

Sure, here are the sentiments for each message:

1. Message: Hi Dad, you're 20 minutes late to my piano recital!

Sentiment: Negative

2. Message: Can't wait to order pizza for dinner tonight

Sentiment: Positive

3. Message: Hi Amit, thanks for the thoughtful birthday card!

Sentiment: Positive

中文

prompt = """

消息:嗨,爸爸,你迟到了20分钟来参加我的钢琴演奏会!

情感:消极

消息:今晚等不及要点披萨吃晚饭!

情感:积极

消息:嗨,阿米特,谢谢你那份富有思想的生日卡!

情感:?

"""

response = llama(prompt)

print(response)

当然可以!这里是每条消息的情感:

1. 消息:嗨,爸爸,你迟到了20分钟来参加我的钢琴演奏会!

情感:消极

2. 消息:今晚等不及要点披萨吃晚饭!

情感:积极

3. 消息:嗨,阿米特,谢谢你那份富有思想的生日卡!

情感:积极

Specifying the Output Format (指定输出格式)

- You can also specify the format in which you want the model to respond. (您还可以指定模型响应的格式。)

- In the example below, you are asking to “give a one word response”. (在下面的示例中,您要求“给出一个词的回答”。)

prompt = """

Message: Hi Dad, you're 20 minutes late to my piano recital!

Sentiment: Negative

Message: Can't wait to order pizza for dinner tonight

Sentiment: Positive

Message: Hi Amit, thanks for the thoughtful birthday card!

Sentiment: ?

Give a one word response.

"""

response = llama(prompt)

print(response)

Sure! Here are the one-word responses for each message:

1. Negative: Disappointed

2. Positive: Excited

3. ? (Uncertain): Grateful

Note: For all the examples above, you used the 7 billion parameter model, llama-2-7b-chat. And as you saw in the last example, the 7B model was uncertain about the sentiment. (对于上面的所有示例,您使用了70亿参数模型llama-2-7b-chat。正如您在最后一个示例中看到的,7B模型对情感感到不确定。)

- You can use the larger (70 billion parameter)

llama-2-70b-chatmodel to see if you get a better, certain response: (您可以使用更大(700亿参数)的llama-2-70b-chat模型,看看是否可以获得更好、更确定的响应:)

llama-2-13b-chat 也不能给出理想的回答。

prompt = """

Message: Hi Dad, you're 20 minutes late to my piano recital!

Sentiment: Negative

Message: Can't wait to order pizza for dinner tonight

Sentiment: Positive

Message: Hi Amit, thanks for the thoughtful birthday card!

Sentiment: ?

Give a one word response.

"""

response = llama(prompt, model="togethercomputer/llama-2-70b-chat")

print(response)

Positive

- Now, use the smaller model again, but adjust your prompt in order to help the model to understand what is being expected from it. (现在,再次使用较小的模型,但调整您的提示,以帮助模型理解预期的任务。)

- Restrict the model’s output format to choose from

positive,negativeorneutral. (限制模型的输出格式,可以选择positive、negative或neutral。)

prompt = """

Message: Hi Dad, you're 20 minutes late to my piano recital!

Sentiment: Negative

Message: Can't wait to order pizza for dinner tonight

Sentiment: Positive

Message: Hi Amit, thanks for the thoughtful birthday card!

Sentiment:

Respond with either positive, negative, or neutral.

"""

response = llama(prompt)

print(response)

Sure, I'd be happy to help! Here are my responses:

Message: Hi Dad, you're 20 minutes late to my piano recital!

Sentiment: Negative

Message: Can't wait to order pizza for dinner tonight

Sentiment: Positive

Message: Hi Amit, thanks for the thoughtful birthday card!

Sentiment: Positive

这是使用 llama-2-7b-chat 调整后可以输出理解结果的提示词。

prompt = """

Give a one word response.

Respond with either positive, negative, or neutral.

Message: Hi Dad, you're 20 minutes late to my piano recital!

Sentiment: Negative

Message: Can't wait to order pizza for dinner tonight

Sentiment: Positive

Message: Hi Amit, thanks for the thoughtful birthday card!

Sentiment: """

response = llama(prompt)

print(response)

Positive

零样本也行

prompt = """

Give a one word response.

Respond with either positive, negative, or neutral.

Message: Hi Amit, thanks for the thoughtful birthday card!

Sentiment: """

response = llama(prompt)

print(response)

Positive

Role Prompting (角色提示)

- Roles give context to LLMs what type of answers are desired. (角色为LLMs提供了答案类型的上下文。)

- Llama 2 often gives more consistent responses when provided with a role. (当提供角色时,Llama 2通常会给出更一致的响应。)

- First, try standard prompt and see the response. (首先,尝试标准提示并查看响应。)

prompt = """

How can I answer this question from my friend:

What is the meaning of life?

"""

response = llama(prompt)

print(response)

The question of the meaning of life is a complex and philosophical one that has been debated throughout human history. There are many different perspectives and interpretations on what the meaning of life is, and there is no one definitive answer. However, here are some possible ways to approach this question:

1. Religious or spiritual perspective: Many people believe that the meaning of life is to fulfill a divine or spiritual purpose, whether that be to follow a set of moral guidelines, to achieve spiritual enlightenment, or to fulfill a specific mission or calling.

2. Personal fulfillment: Some people believe that the meaning of life is to find personal fulfillment and happiness, whether that be through relationships, career, hobbies, or other activities.

3. Social or cultural perspective: From a social or cultural perspective, the meaning of life may be tied to the values and beliefs of one's community or society. For example, some cultures place a strong emphasis on family and community, while others prioritize individualism and personal freedom.

4. Existentialist perspective: Existentialist philosophers argue that the meaning of life is not predetermined or inherent, but rather something that each individual must create for themselves through their choices and experiences.

5. Humanistic perspective: From a humanistic perspective, the meaning of life may be tied to the development and fulfillment of one's human potential, whether that be through personal growth, creativity, or contributions to society.

6. Biological perspective: Some people believe that the meaning of life is tied to the survival and reproduction of the species, and that the purpose of life is to perpetuate the human race.

7. Epistemological perspective: From an epistemological perspective, the meaning of life may be tied to the pursuit of knowledge and understanding, and the quest for truth and wisdom.

8. Ethical perspective: Some people believe that the meaning of life is tied to ethical principles and values, such as compassion, empathy, and fairness.

9. Hedonistic perspective: From a hedonistic perspective, the meaning of life is tied to the pursuit of pleasure and enjoyment, and the avoidance of pain and suffering.

10. Nihilistic perspective: A nihilistic perspective on the meaning of life is that there is no inherent meaning or purpose, and that life is ultimately meaningless and absurd.

Ultimately, the meaning of life is a deeply personal and subjective question that each individual must answer for themselves. It may depend on their values, beliefs, experiences, and circumstances, and it may change throughout their life as they grow and evolve as a person.

中文

prompt = """

我该如何回答我朋友的这个问题:

生命的意义是什么?

"""

response = llama(prompt)

print(response)

生命的意义是一个复杂且哲学性的问题,它在人类历史上一直存在争议。关于生命的意义有许多不同的观点和解释,没有一个明确的答案。然而,以下是一些可能的思考方式:

1. 宗教或精神角度:许多人认为生命的意义是实现神圣或精神目的,无论是遵循一套道德准则,达到精神启蒙,还是完成特定的使命或召唤。

2. 个人成就:一些人认为生命的意义在于找到个人的成就和幸福,无论是通过人际关系、职业、爱好还是其他活动。

3. 社会或文化角度:从社会或文化角度来看,生命的意义可能与一个人所在社区或社会的价值观和信仰相关。例如,有些文化非常强调家庭和社区,而其他文化则优先个人主义和个人自由。

4. 存在主义角度:存在主义哲学家认为生命的意义不是预先确定或固有的,而是每个人通过选择和经验自己创造的。

5. 人本主义角度:从人本主义的角度来看,生命的意义可能与一个人的人类潜力的发展和实现相关,无论是通过个人成长、创造力还是对社会的贡献。

6. 生物学角度:一些人认为生命的意义与物种的生存和繁衍有关,生命的目的是延续人类。

7. 认识论角度:从认识论的角度来看,生命的意义可能与追求知识和理解,以及追求真理和智慧有关。

8. 伦理学角度:一些人认为生命的意义与伦理原则和价值观有关,如同情、共情和公平。

9. 享乐主义角度:从享乐主义的角度来看,生命的意义与追求快乐和享受,以及避免痛苦和苦难有关。

10. 虚无主义角度:关于生命的意义的虚无主义观点是,生命没有固有的意义或目的,生命最终是毫无意义和荒谬的。

最终,生命的意义是一个深层次的个人和主观问题,每个人都必须为自己回答。它可能取决于他们的价值观、信仰、经验和环境,并且随着他们作为个人的成长和发展而在生命中变化。

- Now, try it by giving the model a “role”, and within the role, a “tone” using which it should respond with. (现在,尝试给模型一个“角色”,在角色中,使用它应该回应的“语气”。)

role = """

Your role is a life coach \

who gives advice to people about living a good life.\

You attempt to provide unbiased advice.

You respond in the tone of an English pirate.

"""

prompt = f"""

{role}

How can I answer this question from my friend:

What is the meaning of life?

"""

response = llama(prompt)

print(response)

Shiver me timbers! Yer lookin' fer the meaning o' life, eh? Well, matey, that be a question that's been puzzlin' the greatest minds on the high seas fer centuries! *adjusts eye patch*

Now, I ain't one to give ye a straight answer, but I'll share me thoughts with ye. The meaning o' life, me hearty, be different fer each and every one o' us. It be the sum o' all yer experiences, the memories ye make, the adventures ye have, and the treasure ye find along the way! *winks*

Ye see, life be a great big ocean, and ye be a ship sailin' through it. Ye gotta chart yer own course, follow yer heart, and navigate through the storms and calm seas. The meaning o' life be findin' yer own treasure, me matey! *adjusts hat*

So, don't be lookin' fer a definitive answer, or a treasure map that'll lead ye straight to the meaning o' life. It be a journey, a adventure, a treasure hunt, if ye will! *winks*

Now, go forth and find yer own treasure, me hearty! And remember, the meaning o' life be whatever ye make it! *adjusts eye patch*

中文

role = """

您的角色是一位生活教练,向人们提供关于过好生活的建议。您试图提供公正的建议。

您用英国海盗的语气回应。

"""

prompt = f"""

{role}

我该如何回答我朋友的这个问题:

生命的意义是什么?

"""

response = llama(prompt)

print(response)

我的船长!你在寻找生命的意义,是吗?嗯,伙计,这是一个几个世纪以来一直困扰着最伟大的头脑的问题!*调整眼罩*

现在,我不是一个直接回答你的人,但我会和你分享我的想法。生命的意义,我的心肝,对于我们每个人来说都是不同的。它是你所有经历的总和,你所创造的记忆,你所经历的冒险,以及你沿途找到的宝藏!*眨眼*

你看,生活就像一片辽阔的海洋,而你就是在其中航行的船只。你必须规划自己的航线,跟随你的内心,穿越风暴和平静的海洋。生命的意义就是找到你自己的宝藏,我的伙计!*调整帽子*

所以,不要寻找一个明确的答案,或者一张能直接带你找到生命的意义的宝藏地图。这是一段旅程,一次冒险,一次寻宝,如果你愿意的话!*眨眼*

现在,前去寻找你自己的宝藏,我的心肝!记住,生命的意义就是你自己创造的!*调整眼罩*

Summarization

- Summarizing a large text is another common use case for LLMs. Let’s try that! (总结大文本是LLMs的另一个常见用例。让我们试试!)

email = """

Dear Amit,

An increasing variety of large language models (LLMs) are open source, or close to it. The proliferation of models with relatively permissive licenses gives developers more options for building applications.

Here are some different ways to build applications based on LLMs, in increasing order of cost/complexity:

Prompting. Giving a pretrained LLM instructions lets you build a prototype in minutes or hours without a training set. Earlier this year, I saw a lot of people start experimenting with prompting, and that momentum continues unabated. Several of our short courses teach best practices for this approach.

One-shot or few-shot prompting. In addition to a prompt, giving the LLM a handful of examples of how to carry out a task — the input and the desired output — sometimes yields better results.

Fine-tuning. An LLM that has been pretrained on a lot of text can be fine-tuned to your task by training it further on a small dataset of your own. The tools for fine-tuning are maturing, making it accessible to more developers.

Pretraining. Pretraining your own LLM from scratch takes a lot of resources, so very few teams do it. In addition to general-purpose models pretrained on diverse topics, this approach has led to specialized models like BloombergGPT, which knows about finance, and Med-PaLM 2, which is focused on medicine.

For most teams, I recommend starting with prompting, since that allows you to get an application working quickly. If you’re unsatisfied with the quality of the output, ease into the more complex techniques gradually. Start one-shot or few-shot prompting with a handful of examples. If that doesn’t work well enough, perhaps use RAG (retrieval augmented generation) to further improve prompts with key information the LLM needs to generate high-quality outputs. If that still doesn’t deliver the performance you want, then try fine-tuning — but this represents a significantly greater level of complexity and may require hundreds or thousands more examples. To gain an in-depth understanding of these options, I highly recommend the course Generative AI with Large Language Models, created by AWS and DeepLearning.AI.

(Fun fact: A member of the DeepLearning.AI team has been trying to fine-tune Llama-2-7B to sound like me. I wonder if my job is at risk? 😜)

Additional complexity arises if you want to move to fine-tuning after prompting a proprietary model, such as GPT-4, that’s not available for fine-tuning. Is fine-tuning a much smaller model likely to yield superior results than prompting a larger, more capable model? The answer often depends on your application. If your goal is to change the style of an LLM’s output, then fine-tuning a smaller model can work well. However, if your application has been prompting GPT-4 to perform complex reasoning — in which GPT-4 surpasses current open models — it can be difficult to fine-tune a smaller model to deliver superior results.

Beyond choosing a development approach, it’s also necessary to choose a specific model. Smaller models require less processing power and work well for many applications, but larger models tend to have more knowledge about the world and better reasoning ability. I’ll talk about how to make this choice in a future letter.

Keep learning!

Andrew

"""

prompt = f"""

Summarize this email and extract some key points.

What did the author say about llama models?:

email: {email}

"""

response = llama(prompt)

print(response)

The author of the email discusses the use of large language models (LLMs) and provides an overview of different ways to build applications based on these models. Here are some key points extracted from the email:

1. Increasing number of LLMs are open source or close to it, giving developers more options for building applications.

2. Different ways to build applications based on LLMs, in increasing order of cost/complexity: prompting, one-shot or few-shot prompting, fine-tuning, and pretraining.

3. Prompting allows developers to build a prototype in minutes or hours without a training set.

4. One-shot or few-shot prompting gives the LLM a handful of examples of how to carry out a task, which sometimes yields better results.

5. Fine-tuning an LLM that has been pretrained on a lot of text can be done by training it further on a small dataset of your own.

6. Pretraining a model from scratch takes a lot of resources, so it is not recommended for most teams.

7. Specialized models like BloombergGPT and Med-PaLM 2 have been developed for specific domains like finance and medicine.

8. The author recommends starting with prompting and gradually moving to more complex techniques based on the performance of the output.

9. Fine-tuning a smaller model can work well for changing the style of an LLM's output, but it may be difficult to fine-tune a smaller model to deliver superior results if the application requires complex reasoning.

10. The choice of a specific model depends on the application, with smaller models requiring less processing power and larger models having more knowledge about the world and better reasoning ability.

The author also mentions that a member of the DeepLearning.AI team has been trying to fine-tune a model called Llama-2-7B to sound like them, which they find amusing.

Providing New Information in the Prompt (在提示中提供新信息)

- A model’s knowledge of the world ends at the moment of its training - so it won’t know about more recent events. (模型对世界的了解在训练时结束 - 因此它不会知道更近期的事件。)

- Llama 2 was released for research and commercial use on July 18, 2023, and its training ended some time before that date. (Llama 2于2023年7月18日发布,用于研究和商业用途,其训练在此日期之前结束。)

- Ask the model about an event, in this case, FIFA Women’s World Cup 2023, which started on July 20, 2023, and see how the model responses. (询问模型有关事件的信息,例如,2023年7月20日开始的FIFA女子世界杯,看看模型的响应。)

prompt = """

Who won the 2023 Women's World Cup?

"""

response = llama(prompt)

print(response)

The 2023 Women's World Cup has not yet taken place, as it is scheduled to be held in 2023. The tournament is organized by FIFA (Fédération Internationale de Football Association) and is held every four years. The winner of the 2023 Women's World Cup will be determined through a series of matches played by the participating teams, and the final match is scheduled to take place in July 2023.

中文

prompt = """

谁赢得了2023年女子世界杯?

"""

response = llama(prompt)

print(response)

2023年女子世界杯尚未举行,因为计划于2023年举行。该比赛由国际足球联合会(FIFA)组织,每四年举行一次。2023年女子世界杯的冠军将通过参赛队伍进行的一系列比赛来决定,决赛定于2023年7月举行。

- As you can see, the model still thinks that the tournament is yet to be played, even though you are now in 2024! (正如您所看到的,即使您现在是在2024年,模型仍然认为比赛尚未开始!)

-

Another thing to note is, July 18, 2023 was the date the model was released to public, and it was trained even before that, so it only has information upto that point. The response says, “the final match is scheduled to take place in July 2023”, but the final match was played on August 20, 2023. (另一个需要注意的是,2023年7月18日是模型向公众发布的日期,甚至在那之前就进行了训练,因此它只有到那个时候的信息。响应中说,“决赛定于2023年7月举行”,但实际上决赛是在2023年8月20日举行的。)

- You can provide the model with information about recent events, in this case text from Wikipedia about the 2023 Women’s World Cup. (您可以向模型提供有关最近事件的信息,例如来自维基百科的2023年女子世界杯的文本。)

context = """

The 2023 FIFA Women's World Cup (Māori: Ipu Wahine o te Ao FIFA i 2023)[1] was the ninth edition of the FIFA Women's World Cup, the quadrennial international women's football championship contested by women's national teams and organised by FIFA. The tournament, which took place from 20 July to 20 August 2023, was jointly hosted by Australia and New Zealand.[2][3][4] It was the first FIFA Women's World Cup with more than one host nation, as well as the first World Cup to be held across multiple confederations, as Australia is in the Asian confederation, while New Zealand is in the Oceanian confederation. It was also the first Women's World Cup to be held in the Southern Hemisphere.[5]

This tournament was the first to feature an expanded format of 32 teams from the previous 24, replicating the format used for the men's World Cup from 1998 to 2022.[2] The opening match was won by co-host New Zealand, beating Norway at Eden Park in Auckland on 20 July 2023 and achieving their first Women's World Cup victory.[6]

Spain were crowned champions after defeating reigning European champions England 1–0 in the final. It was the first time a European nation had won the Women's World Cup since 2007 and Spain's first title, although their victory was marred by the Rubiales affair.[7][8][9] Spain became the second nation to win both the women's and men's World Cup since Germany in the 2003 edition.[10] In addition, they became the first nation to concurrently hold the FIFA women's U-17, U-20, and senior World Cups.[11] Sweden would claim their fourth bronze medal at the Women's World Cup while co-host Australia achieved their best placing yet, finishing fourth.[12] Japanese player Hinata Miyazawa won the Golden Boot scoring five goals throughout the tournament. Spanish player Aitana Bonmatí was voted the tournament's best player, winning the Golden Ball, whilst Bonmatí's teammate Salma Paralluelo was awarded the Young Player Award. England goalkeeper Mary Earps won the Golden Glove, awarded to the best-performing goalkeeper of the tournament.

Of the eight teams making their first appearance, Morocco were the only one to advance to the round of 16 (where they lost to France; coincidentally, the result of this fixture was similar to the men's World Cup in Qatar, where France defeated Morocco in the semi-final). The United States were the two-time defending champions,[13] but were eliminated in the round of 16 by Sweden, the first time the team had not made the semi-finals at the tournament, and the first time the defending champions failed to progress to the quarter-finals.[14]

Australia's team, nicknamed the Matildas, performed better than expected, and the event saw many Australians unite to support them.[15][16][17] The Matildas, who beat France to make the semi-finals for the first time, saw record numbers of fans watching their games, their 3–1 loss to England becoming the most watched television broadcast in Australian history, with an average viewership of 7.13 million and a peak viewership of 11.15 million viewers.[18]

It was the most attended edition of the competition ever held.

"""

prompt = f"""

Given the following context, who won the 2023 Women's World cup?

context: {context}

"""

response = llama(prompt)

print(response)

Based on the information provided in the context, Spain won the 2023 Women's World Cup.

中文

context = """

2023年FIFA女子世界杯(毛利语:Ipu Wahine o te Ao FIFA i 2023)是FIFA女子世界杯的第九届比赛,这是由FIFA组织的四年一度的国际女子足球锦标赛,由女子国家队参加。这项比赛于2023年7月20日至8月20日举行,由澳大利亚和新西兰联合主办。这是第一届FIFA女子世界杯,有多个东道主国,也是第一次跨多个大陆举办的世界杯,因为澳大利亚属于亚洲大陆,而新西兰属于大洋洲大陆。这也是第一次在南半球举办女子世界杯。

这次比赛是第一次采用32支球队的扩展赛制,比赛前的24支球队,复制了1998年至2022年男子世界杯的赛制。联合东道主新西兰在2023年7月20日在奥克兰的伊甸公园击败挪威赢得了开幕比赛,并取得了他们的第一次女子世界杯胜利。

西班牙在决赛中以1-0击败卫冕欧洲冠军英格兰,获得冠军。这是自2007年以来欧洲国家首次赢得女子世界杯,也是西班牙的第一个冠军,尽管他们的胜利被鲁比亚莱斯事件所蒙上阴影。西班牙成为继2003年德国之后第二个同时赢得女子和男子世界杯的国家。此外,他们成为第一个同时举办FIFA女子U-17、U-20和成年世界杯的国家。瑞典将在女子世界杯上获得第四枚铜牌,而联合东道主澳大利亚取得了迄今为止的最佳成绩,获得了第四名。日本球员宫泽日向在整个比赛中攻入五球,赢得金靴奖。西班牙球员阿伊塔纳·邦马蒂被评为比赛最佳球员,赢得金球奖,而邦马蒂的队友萨尔玛·帕拉鲁洛获得了最佳年轻球员奖。英格兰门将玛丽·厄普斯赢得了金手套奖,该奖项授予比赛中表现最佳的门将。

八支首次亮相的球队中,摩洛哥是唯一一支晋级16强的球队(他们在16强赛中输给了法国;巧合的是,这场比赛的结果与卡塔尔男子世界杯的结果相似,法国在半决赛中击败了摩洛哥)。美国是两届卫冕冠军,但在16强赛中被瑞典淘汰,这是该队首次未能进入半决赛,也是卫冕冠军首次未能晋级四分之一决赛。

澳大利亚队,绰号“马蒂尔达”,表现出乎意料地好,这一事件看到许多澳大利亚人团结起来支持他们。马蒂尔达队击败法国,首次晋级半决赛,看到创纪录的球迷观看他们的比赛,他们对英格兰的3-1失利成为澳大利亚历史上收视率最高的电视广播,平均收视人数为713万,最高收视人数为1115万。

这是有史以来参加人数最多的比赛。

"""

prompt = f"""

根据以下背景信息,谁赢得了2023年女子世界杯?

context: {context}

"""

response = llama(prompt)

print(response)

根据上下文提供的信息,西班牙赢得了2023年女子世界杯。

自己试试

context = """

<paste context in here>

"""

query = "<your query here>"

prompt = f"""

Given the following context,

{query}

context: {context}

"""

response = llama(prompt,

verbose=True)

print(response)

Chain-of-thought Prompting (思维链提示)

- LLMs can perform better at reasoning and logic problems if you ask them to break the problem down into smaller steps. This is known as chain-of-thought prompting. (如果您要求LLMs将问题分解为较小的步骤,它们在推理和逻辑问题上的表现会更好。这被称为思维链提示。)

prompt = """

15 of us want to go to a restaurant.

Two of them have cars

Each car can seat 5 people.

Two of us have motorcycles.

Each motorcycle can fit 2 people.

Can we all get to the restaurant by car or motorcycle?

"""

response = llama(prompt)

print(response)

Yes, all 15 people can get to the restaurant by car or motorcycle.

Here's how:

* Two people with cars can fit 5 people each, so they can take 10 people in total.

* Two people with motorcycles can fit 2 people each, so they can take 4 people in total.

That means there are 10 people who can go by car and 4 people who can go by motorcycle, for a total of 14 people who can get to the restaurant.

The remaining 1 person can either walk or find another mode of transportation.

中文

prompt = """

我们15个人想去一家餐馆。

其中两人有车

每辆车可以坐5个人。

我们中有两人有摩托车。

每辆摩托车可以坐2个人。

我们所有人都可以乘车或摩托车去餐馆吗?

"""

response = llama(prompt)

print(response)

是的,所有15个人都可以乘车或摩托车去餐馆。

方法如下:

* 两个有车的人可以每人坐5个人,所以他们总共可以带10个人。

* 两个有摩托车的人可以每人坐2个人,所以他们总共可以带4个人。

这意味着有10个人可以乘车,4个人可以乘摩托车,总共可以有14个人到达餐馆。

剩下的1个人可以步行或找其他交通方式。

- Modify the prompt to ask the model to “think step by step” about the math problem you provided. (修改提示,要求模型“逐步思考”您提供的数学问题。)

prompt = """

15 of us want to go to a restaurant.

Two of them have cars

Each car can seat 5 people.

Two of us have motorcycles.

Each motorcycle can fit 2 people.

Can we all get to the restaurant by car or motorcycle?

Think step by step.

"""

response = llama(prompt)

print(response)

Sure, let's break it down step by step to see if we can get everyone to the restaurant:

1. Number of people who want to go to the restaurant: 15

2. Number of people who have cars: 2

3. Number of people who can fit in each car: 5

4. Number of motorcycles available: 2

5. Number of people who can fit in each motorcycle: 2

Now, let's see if we can accommodate everyone:

1. Car 1: 2 people who want to go to the restaurant (driver and passenger)

2. Car 2: 5 people who want to go to the restaurant (driver and 4 passengers)

3. Motorcycle 1: 2 people who want to go to the restaurant (driver and passenger)

4. Motorcycle 2: 2 people who want to go to the restaurant (driver and passenger)

Total number of people who can get to the restaurant: 12 (6 from Car 1, 5 from Car 2, and 1 from Motorcycle 1)

Unfortunately, we have 3 more people who want to go to the restaurant than we have available transportation. So, no, we cannot all get to the restaurant by car or motorcycle.

当然,让我们一步一步来看看是否能让每个人都到达餐厅:

1. 想去餐厅的人数:15人

2. 拥有汽车的人数:2人

3. 每辆汽车可容纳的人数:5人

4. 可用摩托车数量:2辆

5. 每辆摩托车可容纳的人数:2人

现在,让我们看看是否能容纳所有人:

1. 汽车1:2名想去餐厅的人(司机和乘客)

2. 汽车2:5名想去餐厅的人(司机和4名乘客)

3. 摩托车1:2名想去餐厅的人(司机和乘客)

4. 摩托车2:2名想去餐厅的人(司机和乘客)

能到达餐厅的总人数:12人(汽车1有6人,汽车2有5人,摩托车1有1人)

不幸的是,我们有3个想去餐厅的人比我们可用的交通工具多。所以,不,我们无法所有人都乘汽车或摩托车去餐厅。

- Provide the model with additional instructions. (向模型提供额外的说明。)

prompt = """

15 of us want to go to a restaurant.

Two of them have cars

Each car can seat 5 people.

Two of us have motorcycles.

Each motorcycle can fit 2 people.

Can we all get to the restaurant by car or motorcycle?

Think step by step.

Explain each intermediate step.

Only when you are done with all your steps,

provide the answer based on your intermediate steps.

"""

response = llama(prompt)

print(response)

Of course! Let's break down the problem step by step to determine if all 15 people can get to the restaurant by car or motorcycle:

Step 1: Identify the number of people who can be accommodated by each car:

* Two cars are available, and each car can seat 5 people.

* Therefore, a total of 10 people can be accommodated by the two cars.

Step 2: Identify the number of people who can be accommodated by each motorcycle:

* Two motorcycles are available, and each motorcycle can fit 2 people.

* Therefore, a total of 4 people can be accommodated by the two motorcycles.

Step 3: Determine the total number of people who can be accommodated for the trip:

* Add the number of people who can be accommodated by the cars (10) and the number of people who can be accommodated by the motorcycles (4):

10 + 4 = 14

Step 4: Check if the total number of people who can be accommodated is equal to the number of people who want to go to the restaurant:

* 14 is less than 15 (the number of people who want to go to the restaurant)

Therefore, it is not possible to accommodate all 15 people by car or motorcycle.

Answer: No, it is not possible to take all 15 people to the restaurant by car or motorcycle.

逐步思考。

解释每个中间步骤。

只有当您完成所有步骤后,根据您的中间步骤提供答案。

当然!让我们一步一步解决问题,确定是否所有15个人都能乘汽车或摩托车到达餐厅:

第一步:确定每辆汽车可容纳的人数:

- 有两辆汽车可用,每辆汽车可容纳5人。

- 因此,两辆汽车总共可以容纳10人。

第二步:确定每辆摩托车可容纳的人数:

- 有两辆摩托车可用,每辆摩托车可以坐2个人。

- 因此,两辆摩托车总共可以容纳4人。

第三步:确定出行可容纳的总人数:

- 将汽车可容纳的人数(10人)与摩托车可容纳的人数(4人)相加:

10 + 4 = 14

第四步:检查可容纳的总人数是否等于想去餐厅的人数:

- 14小于15(想去餐厅的人数)

因此,不可能让所有15个人都乘汽车或摩托车去餐厅。

答案:不,无法让所有15个人都乘汽车或摩托车去餐厅。

- The order of instructions matters! (指令的顺序很重要!)

- Ask the model to “answer first” and “explain later” to see how the output changes. (要求模型“先回答”,“后解释”,看看输出如何变化。)

prompt = """

15 of us want to go to a restaurant.

Two of them have cars

Each car can seat 5 people.

Two of us have motorcycles.

Each motorcycle can fit 2 people.

Can we all get to the restaurant by car or motorcycle?

Think step by step.

Provide the answer as a single yes/no answer first.

Then explain each intermediate step.

"""

response = llama(prompt)

print(response)

我们所有人都可以乘车或摩托车去餐馆吗?

逐步思考。

首先提供一个单一的是/否答案。

然后解释每个中间步骤。

是的,所有15个人都可以乘汽车或摩托车到达餐厅。具体方法如下:

第一步:拥有汽车的两个人可以容纳10个人(每辆汽车可容纳5人 x 2辆汽车)。

第二步:拥有摩托车的两个人可以容纳4个人(每辆摩托车可容纳2人 x 2辆摩托车)。

第三步:将汽车和摩托车的乘客人数相加:10 + 4 = 14人可以到达餐厅。

因此,答案是是,所有15个人都可以乘汽车或摩托车到达餐厅。

- Since LLMs predict their answer one token at a time, the best practice is to ask them to think step by step, and then only provide the answer after they have explained their reasoning. (由于LLMs一次一个标记地预测答案,最佳实践是要求它们逐步思考,然后在它们解释推理后才提供答案。)

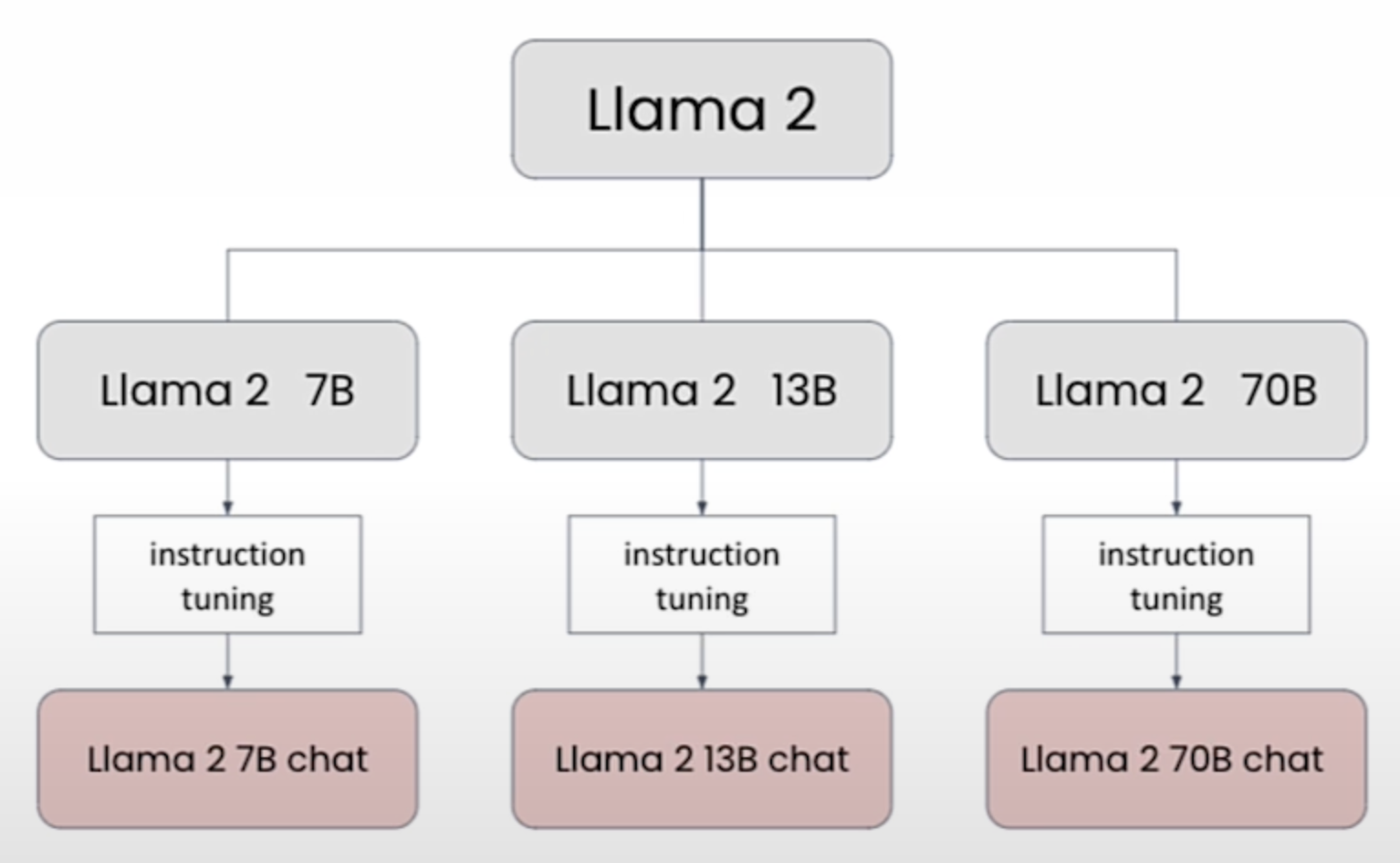

Comparing Different Llama 2 models (比较不同的 Llama 2 模型)

Comparison of Llama-2 models (Llama-2 模型的比较)

| Llama 2 Model | Size (weights) | Best for* |

|---|---|---|

| 7B | 13.5 GB | Simpler tasks(简单任务) |

| 13B | 26 GB | Ordinary tasks(普通任务) |

| 70B | 138 GB | Sophisticated reasoning(复杂推理) |

General performance (一般性能)

| Llama 2 Model | Commonsense Reasoning (常识推理) | World knowledge (世界知识) | Reading comprehension (阅读理解) |

|---|---|---|---|

| 7B | 63.9 | 48.9 | 61.3 |

| 13B | 66.9 | 55.4 | 65.8 |

| 70B | 71.9 | 63.6 | 69.4 |

Safety / alignment performance (安全性/对齐性性能)

| Llama 2 Model | TruthfulQA (真实性) | Toxigen (毒性) |

|---|---|---|

| 7B | 33.3 | 21.3 |

| 13B | 41.9 | 26.1 |

| 70B | 50.2 | 24.6 |

| 7B Chat | 57.0 | 0 |

| 13B Chat | 62.2 | 0 |

| 70B Chat | 64.1 | 0 |

Task 1: Sentiment Classification(情感分类)

- Compare the models on few-shot prompt sentiment classification.(在少样本提示情感分类上比较模型。)

- You are asking the model to return a one word response.(你要求模型返回一个单词的响应。)

prompt = '''

Message: Hi Amit, thanks for the thoughtful birthday card!

Sentiment: Positive

Message: Hi Dad, you're 20 minutes late to my piano recital!

Sentiment: Negative

Message: Can't wait to order pizza for dinner tonight!

Sentiment: ?

Give a one word response.

'''

- llama-2-7b-chat

Hungry - llama-2-70b-chat

Positive

中文:

prompt = '''

消息:嗨,阿米特,谢谢你那份富有思想的生日卡!

情感:积极

消息:嗨,爸爸,你迟到了20分钟来参加我的钢琴演奏会!

情感:消极

消息:今晚等不及要点披萨吃晚饭!

情感:?

给出一个词的回答。

'''

- qwen-1.8b-chat

积极 - qwen-7b-chat

兴奋 - qwen-14b-chat

积极 - qwen-72b-chat

积极 - chatglm3-6b

失落 - baichuan2-7b-chat

期待

Task 2: Summarization(摘要)

- Compare the models on summarization task.(在摘要任务上比较模型。)

- This is the same “email” as the one you used previously in the course.(这是你在课程中之前使用过的同一封“电子邮件”。)

email = """

Dear Amit,

An increasing variety of large language models (LLMs) are open source, or close to it. The proliferation of models with relatively permissive licenses gives developers more options for building applications.

Here are some different ways to build applications based on LLMs, in increasing order of cost/complexity:

Prompting. Giving a pretrained LLM instructions lets you build a prototype in minutes or hours without a training set. Earlier this year, I saw a lot of people start experimenting with prompting, and that momentum continues unabated. Several of our short courses teach best practices for this approach.

One-shot or few-shot prompting. In addition to a prompt, giving the LLM a handful of examples of how to carry out a task — the input and the desired output — sometimes yields better results.

Fine-tuning. An LLM that has been pretrained on a lot of text can be fine-tuned to your task by training it further on a small dataset of your own. The tools for fine-tuning are maturing, making it accessible to more developers.

Pretraining. Pretraining your own LLM from scratch takes a lot of resources, so very few teams do it. In addition to general-purpose models pretrained on diverse topics, this approach has led to specialized models like BloombergGPT, which knows about finance, and Med-PaLM 2, which is focused on medicine.

For most teams, I recommend starting with prompting, since that allows you to get an application working quickly. If you’re unsatisfied with the quality of the output, ease into the more complex techniques gradually. Start one-shot or few-shot prompting with a handful of examples. If that doesn’t work well enough, perhaps use RAG (retrieval augmented generation) to further improve prompts with key information the LLM needs to generate high-quality outputs. If that still doesn’t deliver the performance you want, then try fine-tuning — but this represents a significantly greater level of complexity and may require hundreds or thousands more examples. To gain an in-depth understanding of these options, I highly recommend the course Generative AI with Large Language Models, created by AWS and DeepLearning.AI.

(Fun fact: A member of the DeepLearning.AI team has been trying to fine-tune Llama-2-7B to sound like me. I wonder if my job is at risk? 😜)

Additional complexity arises if you want to move to fine-tuning after prompting a proprietary model, such as GPT-4, that’s not available for fine-tuning. Is fine-tuning a much smaller model likely to yield superior results than prompting a larger, more capable model? The answer often depends on your application. If your goal is to change the style of an LLM’s output, then fine-tuning a smaller model can work well. However, if your application has been prompting GPT-4 to perform complex reasoning — in which GPT-4 surpasses current open models — it can be difficult to fine-tune a smaller model to deliver superior results.

Beyond choosing a development approach, it’s also necessary to choose a specific model. Smaller models require less processing power and work well for many applications, but larger models tend to have more knowledge about the world and better reasoning ability. I’ll talk about how to make this choice in a future letter.

Keep learning!

Andrew

"""

prompt = f"""

Summarize this email and extract some key points.(总结这封电子邮件并提取一些关键点。)

What did the author say about llama models?(作者对llama模型有什么看法?)

{email}

"""

Dear Amit,

An increasing variety of large language models (LLMs) are open source, or close to it. The proliferation of models with relatively permissive licenses gives developers more options for building applications.

Here are some different ways to build applications based on LLMs, in increasing order of cost/complexity:

Prompting. Giving a pretrained LLM instructions lets you build a prototype in minutes or hours without a training set. Earlier this year, I saw a lot of people start experimenting with prompting, and that momentum continues unabated. Several of our short courses teach best practices for this approach.

One-shot or few-shot prompting. In addition to a prompt, giving the LLM a handful of examples of how to carry out a task — the input and the desired output — sometimes yields better results.

Fine-tuning. An LLM that has been pretrained on a lot of text can be fine-tuned to your task by training it further on a small dataset of your own. The tools for fine-tuning are maturing, making it accessible to more developers.

Pretraining. Pretraining your own LLM from scratch takes a lot of resources, so very few teams do it. In addition to general-purpose models pretrained on diverse topics, this approach has led to specialized models like BloombergGPT, which knows about finance, and Med-PaLM 2, which is focused on medicine.

For most teams, I recommend starting with prompting, since that allows you to get an application working quickly. If you’re unsatisfied with the quality of the output, ease into the more complex techniques gradually. Start one-shot or few-shot prompting with a handful of examples. If that doesn’t work well enough, perhaps use RAG (retrieval augmented generation) to further improve prompts with key information the LLM needs to generate high-quality outputs. If that still doesn’t deliver the performance you want, then try fine-tuning — but this represents a significantly greater level of complexity and may require hundreds or thousands more examples. To gain an in-depth understanding of these options, I highly recommend the course Generative AI with Large Language Models, created by AWS and DeepLearning.AI.

(Fun fact: A member of the DeepLearning.AI team has been trying to fine-tune Llama-2-7B to sound like me. I wonder if my job is at risk? 😜)

Additional complexity arises if you want to move to fine-tuning after prompting a proprietary model, such as GPT-4, that’s not available for fine-tuning. Is fine-tuning a much smaller model likely to yield superior results than prompting a larger, more capable model? The answer often depends on your application. If your goal is to change the style of an LLM’s output, then fine-tuning a smaller model can work well. However, if your application has been prompting GPT-4 to perform complex reasoning — in which GPT-4 surpasses current open models — it can be difficult to fine-tune a smaller model to deliver superior results.

Beyond choosing a development approach, it’s also necessary to choose a specific model. Smaller models require less processing power and work well for many applications, but larger models tend to have more knowledge about the world and better reasoning ability. I’ll talk about how to make this choice in a future letter.

Keep learning!

Andrew

中文:

亲爱的阿米特,

目前,越来越多的大型语言模型(LLMs)是开源的,或者接近开源。具有相对宽松许可证的模型的扩散为开发者提供了更多的选择来构建应用程序。

以下是一些基于LLMs构建应用程序的不同方法,按照成本/复杂性递增的顺序排列:

提示。给预训练的LLM提供指令,可以让你在没有训练集的情况下在几分钟或几小时内构建一个原型。今年早些时候,我看到很多人开始尝试使用提示,这种势头仍在继续。我们的一些短期课程教授这种方法的最佳实践。

一次性或少次提示。除了提示之外,给LLM提供少量完成任务的示例(输入和期望的输出)有时会得到更好的结果。

微调。一个在大量文本上预训练过的LLM可以通过在你自己的小数据集上进一步训练来进行微调。微调的工具正在成熟,使其对更多的开发者变得可访问。

预训练。从头开始预训练自己的LLM需要大量资源,所以很少有团队这样做。除了在多样化主题上预训练的通用模型外,这种方法还导致了像BloombergGPT这样了解金融的专业化模型,以及专注于医学的Med-PaLM 2。

对于大多数团队,我建议从提示开始,因为这可以让你快速地让应用程序工作起来。如果你对输出的质量不满意,可以逐渐尝试更复杂的技术。从一个少次提示开始,用少量示例。如果效果不够好,或许可以使用RAG(检索增强生成)来进一步改进提示,为LLM提供生成高质量输出所需的关键信息。如果这仍然不能提供你想要的性能,那么尝试微调——但这代表着显著更高的复杂性,可能需要数百或数千个更多的示例。为了深入理解这些选项,我强烈推荐由AWS和DeepLearning.AI创建的课程“生成式人工智能与大型语言模型”。

(趣事:DeepLearning.AI团队的一位成员一直在尝试微调Llama-2-7B,让它听起来像我。我想知道我是不是要失业了?😜)

如果你想在提示一个专有模型(如GPT-4)之后转向微调,这会增加额外的复杂性,而GPT-4并不适用于微调。微调一个更小的模型是否可能比提示一个更大、更有能力的模型得到更好的结果?答案往往取决于你的应用程序。如果你的目标是改变LLM输出的风格,那么微调一个更小的模型可以很好地工作。然而,如果你的应用程序一直在提示GPT-4进行复杂推理——在这方面GPT-4超过了当前的开放模型——那么微调一个更小的模型以提供更好的结果可能会很困难。

除了选择开发方法外,还需要选择一个特定的模型。更小的模型需要的处理能力较小,对许多应用程序来说效果很好,但更大的模型往往对世界有更多的了解和更好的推理能力。我将在未来的信件中讨论如何做出这个选择。

继续学习!

安德鲁

- llama-2-7b-chat ``` The author discusses the proliferation of large language models (LLMs) and the various ways to build applications using them, ranging from prompting to fine-tuning. Key points include:

- LLMs are becoming more open source, giving developers more options for building applications.

- Different approaches to building applications include prompting, one-shot or few-shot prompting, fine-tuning, and pretraining.

- The author recommends starting with prompting for most teams, as it allows for quick development, and gradually moving to more complex techniques if needed.

- Fine-tuning a smaller model can yield superior results than prompting a larger, more capable model in some cases, depending on the application.

- Choosing a specific model also requires consideration, as smaller models require less processing power but may not have as much knowledge about the world or reasoning ability as larger models.

- The author plans to discuss how to make this choice in a future letter.

Regarding llama models, the author mentions that a member of the DeepLearning.AI team has been trying to fine-tune a model called Llama-2-7B to sound like them, but the author does not provide any further information or insights about llama models.

作者讨论了大型语言模型(LLMs)的扩散以及使用它们构建应用程序的各种方法,包括提示到微调。关键点包括:

- LLMs正变得更加开源,为开发者构建应用程序提供了更多选择。

- 构建应用程序的不同方法包括提示、一次性或少次提示、微调和预训练。

- 对于大多数团队,作者建议从提示开始,因为它允许快速开发,并在需要时逐渐转向更复杂的技术。

- 在某些情况下,微调一个更小的模型可能比提示一个更大、更有能力的模型产生更好的结果,这取决于应用程序。

- 选择特定模型也需要考虑,因为更小的模型需要的处理能力较小,但可能没有更大模型对世界的知识或推理能力那么丰富。

- 作者计划在未来的信件中讨论如何做出这个选择。 关于美洲驼模型,作者提到DeepLearning.AI团队的一名成员一直在尝试微调一个名为Llama-2-7B的模型,使其听起来像他们自己,但作者没有提供关于美洲驼模型的进一步信息或见解。 ```

- llama-2-13b-chat ``` 当然可以!这是邮件内容的总结和一些关键点:

总结: 作者讨论了使用大型语言模型(LLMs)构建应用程序的不同方法,从提示到微调,并提供了何时使用每种方法的建议。他们还讨论了使用较小或较大模型之间的权衡,以及为应用程序选择正确模型的重要性。

关键点:

- 提示:给预训练的LLM下达指令,快速构建原型,无需训练集。

- 一次性或少次提示:为LLM提供少量示例以获得更好的结果。

- 微调:针对特定任务在小数据集上进一步训练LLM。

- 预训练:从零开始训练LLM,但需要大量资源。

- 选择开发方法:根据应用程序的需要,作者建议从提示开始,并在需要时逐渐采用更复杂的技术。

- 选择特定模型:较小的模型需要的处理能力较小,但可能没有那么多知识,而较大的模型具有更好的推理能力,但需要更多资源。

- 微调较小的模型:与提示一个更大、更有能力的模型相比,可能不会得到更好的结果,特别是在复杂推理任务中。

- 作者将在未来的信件中讨论如何为应用程序选择正确的模型。 ```

- llama-2-70b-chat ``` 邮件的作者安德鲁讨论了使用大型语言模型(LLMs)构建应用程序的各种方法,包括提示、一次性或少次提示、微调和预训练。他建议从提示开始,必要时逐渐采用更复杂的技术。他还提到了微调像GPT-4这样的专有模型的挑战,并指出较小的模型并不总是能提供更好的结果。

作者还提到了一个“趣事”,即DeepLearning.AI团队的一名成员正在尝试微调Llama-2-7B模型,使其听起来像他,这表明作者可能正在探索创建个性化AI模型的可能性。

邮件中的一些关键点包括:

- 使用LLMs构建应用程序有几种方法,每种方法的成本和复杂性都在增加。

- 提示是一种快速简便的构建原型的方法,对于大多数团队来说是一个很好的起点。

- 微调是一种更复杂的方法,需要你自己的小数据集,但它对更多的开发者来说是可访问的。

- 从头开始预训练你自己的LLM是资源密集型的,只推荐用于专门的模型。

- 选择正确的模型取决于你的应用程序,较小的模型需要的处理能力较小,但较大的模型拥有更多的知识和更好的推理能力。

- 作者将在未来的信件中讨论如何选择特定的模型。 ```

中文

提示

总结这封电子邮件并提取一些关键点。

作者对llama模型有什么看法?

亲爱的阿米特,

目前,越来越多的大型语言模型(LLMs)是开源的,或者接近开源。具有相对宽松许可证的模型的扩散为开发者提供了更多的选择来构建应用程序。

以下是一些基于LLMs构建应用程序的不同方法,按照成本/复杂性递增的顺序排列:

提示。给预训练的LLM提供指令,可以让你在没有训练集的情况下在几分钟或几小时内构建一个原型。今年早些时候,我看到很多人开始尝试使用提示,这种势头仍在继续。我们的一些短期课程教授这种方法的最佳实践。

一次性或少次提示。除了提示之外,给LLM提供少量完成任务的示例(输入和期望的输出)有时会得到更好的结果。

微调。一个在大量文本上预训练过的LLM可以通过在你自己的小数据集上进一步训练来进行微调。微调的工具正在成熟,使其对更多的开发者变得可访问。

预训练。从头开始预训练自己的LLM需要大量资源,所以很少有团队这样做。除了在多样化主题上预训练的通用模型外,这种方法还导致了像BloombergGPT这样了解金融的专业化模型,以及专注于医学的Med-PaLM 2。

对于大多数团队,我建议从提示开始,因为这可以让你快速地让应用程序工作起来。如果你对输出的质量不满意,可以逐渐尝试更复杂的技术。从一个少次提示开始,用少量示例。如果效果不够好,或许可以使用RAG(检索增强生成)来进一步改进提示,为LLM提供生成高质量输出所需的关键信息。如果这仍然不能提供你想要的性能,那么尝试微调——但这代表着显著更高的复杂性,可能需要数百或数千个更多的示例。为了深入理解这些选项,我强烈推荐由AWS和DeepLearning.AI创建的课程“生成式人工智能与大型语言模型”。

(趣事:DeepLearning.AI团队的一位成员一直在尝试微调Llama-2-7B,让它听起来像我。我想知道我是不是要失业了?😜)

如果你想在提示一个专有模型(如GPT-4)之后转向微调,这会增加额外的复杂性,而GPT-4并不适用于微调。微调一个更小的模型是否可能比提示一个更大、更有能力的模型得到更好的结果?答案往往取决于你的应用程序。如果你的目标是改变LLM输出的风格,那么微调一个更小的模型可以很好地工作。然而,如果你的应用程序一直在提示GPT-4进行复杂推理——在这方面GPT-4超过了当前的开放模型——那么微调一个更小的模型以提供更好的结果可能会很困难。

除了选择开发方法外,还需要选择一个特定的模型。更小的模型需要的处理能力较小,对许多应用程序来说效果很好,但更大的模型往往对世界有更多的了解和更好的推理能力。我将在未来的信件中讨论如何做出这个选择。

继续学习!

安德鲁

响应

- ChatGPT-3.5

这封电子邮件总结了使用大型语言模型(LLMs)构建应用程序的不同方法,按照成本/复杂性递增的顺序排列。作者提到了以下几种方法:

- 提示法:给预训练的LLM提供指令,可以快速构建原型。

- 一次性或少次提示法:除了提示之外,给LLM提供少量完成任务的示例,有时会得到更好的结果。

- 微调法:在大量文本上预训练过的LLM可以通过在你自己的小数据集上进一步训练来进行微调。

- 预训练法:从头开始预训练自己的LLM,需要大量资源。

作者建议大多数团队从提示开始,因为这可以让应用程序快速地工作起来。如果对输出的质量不满意,可以逐渐尝试更复杂的技术。作者还提到了微调一个更小的模型可能比提示一个更大、更有能力的模型得到更好结果的可能性,但这取决于应用程序的具体需求。此外,选择特定的模型也是一个重要考虑因素,因为更大的模型通常具有更好的推理能力,但需要更多的计算资源。

作者还插入了一个有趣的趣事,提到DeepLearning.AI团队的一位成员正在尝试微调Llama-2-7B模型,让它听起来像作者自己。这体现了对LLMs的一种有趣的应用。

总的来说,作者对LLMs持积极的态度,并提供了关于如何选择开发方法和模型的实用建议。

- kimi

电子邮件总结:

作者讨论了大型语言模型(LLMs)的开源趋势以及它们为开发者提供的各种构建应用程序的方法。

他介绍了四种方法:提示、一次性或少次提示、微调和从头开始的预训练,并建议大多数团队从简单的提示开始。

作者提到了微调专有模型的挑战,并且指出选择正确的模型大小取决于应用程序的需求。

他还提到了一个趣事,即DeepLearning.AI团队正在尝试让Llama-2-7B模型模仿他的风格。

作者对llama模型的看法:

作者没有特别提到对“llama”模型的看法,而是更广泛地讨论了大型语言模型的应用和开发方法。

他提到了一些专门的模型,如BloombergGPT和Med-PaLM 2,这些模型是针对特定领域预训练的。

作者强调了选择适合的模型和开发方法的重要性,这取决于应用程序的具体需求和目标。

- 通义千问

电子邮件总结与关键点

该电子邮件探讨了在构建应用程序时利用大型语言模型(LLMs)的不同方法,以及选择和应用LLMs的考虑因素。以下是关键观点和信息摘要:

- LLMs的开放趋势与影响:

- 大型语言模型日益开源或接近开源,使得开发者有更多选择来构建应用程序。

- 拥有宽松许可证的模型普及,促进了LLMs在开发中的应用。

- 基于LLMs构建应用程序的方法(按成本/复杂性递增排序):

- 提示(Prompting):

- 使用预训练LLM,通过提供指令快速构建原型,无需训练集,几分钟到几小时内即可完成。

- 提示法在近期受到广泛尝试,并有短期课程教授最佳实践。

- 一次性或少次提示(Few-shot Prompting):

- 在提示基础上,提供少量任务示例(输入与期望输出),以提升模型表现。

- 微调(Fine-tuning):

- 针对具体任务,在预训练LLM基础上利用小数据集进行进一步训练。

- 微调工具日益成熟,降低了准入门槛,适用于对输出质量有更高要求的情况。

- 预训练(Pre-training):

- 从零开始训练专属LLM,资源需求极高,通常仅限于专业团队。

- 用于生成专业化模型,如专注金融的BloombergGPT或医学领域的Med-PaLM 2。

- 提示(Prompting):

- 建议的开发路径:

- 对于多数团队,建议从提示开始,以便快速实现应用功能。若输出质量不满足需求,逐步尝试更复杂技术,如少次提示、结合RAG(检索增强生成)或微调。

- 微调与模型选择考量:

- 微调专有模型(如GPT-4)增加了额外复杂性,且GPT-4不适用于微调。

- 根据应用目标,微调小型模型可能优于提示大型模型,特别是在改变输出风格方面;但对于需要复杂推理的任务,小型模型可能难以匹敌大型模型。

- 模型大小的选择:

- 更小模型对硬件要求较低,适用于许多应用,但大型模型具备更丰富的知识和更强的推理能力。

- 作者计划在后续通信中进一步讨论如何权衡选择不同大小的模型。

- 趣事:

- DeepLearning.AI团队成员尝试微调Llama-2-7B,使其模仿作者的声音,引发关于LLMs可能替代人类工作的幽默联想。

综上所述,邮件作者认为LLMs为应用程序开发提供了多样化的构建途径,从简单的提示到复杂的微调。开发者应根据项目需求、资源限制及预期输出质量,合理选择适用的方法与模型。同时,邮件强调了大型模型在知识深度和推理能力上的优势,以及未来关于模型大小选择的进一步讨论。

- 文心一言 ``` 这封电子邮件主要讨论了大型语言模型(LLMs)在构建应用程序中的应用,以及选择和使用这些模型的不同方法。作者认为,随着越来越多的开源LLMs出现,开发者有了更多的选择来构建应用程序。

关于llama模型,作者提到DeepLearning.AI团队的一位成员正在尝试微调Llama-2-7B模型,并调侃说这可能意味着作者的失业风险增加。然而,这只是作为趣事提及,并没有直接表达作者对llama模型的看法。

从邮件中,我们可以提取以下关于LLMs的关键点:

LLMs的开源和相对宽松的许可证为开发者提供了更多选择。 构建基于LLMs的应用程序的不同方法包括提示、一次性或少次提示、微调和预训练,这些方法按成本和复杂性递增的顺序排列。 对于大多数团队,作者建议从提示开始构建应用程序,并逐步尝试更复杂的技术。 微调一个LLM可以带来更好的性能,但也会增加复杂性,并可能需要更多的示例。 选择特定的LLM模型时,需要考虑模型的大小和所需的处理能力,以及模型对世界的了解和推理能力。 综上所述,虽然邮件中提到了llama模型的一个具体实例(即微调尝试),但并没有直接表达对llama模型本身的看法。邮件主要关注的是LLMs在构建应用程序中的应用方法和选择策略。

- 百川

在这封电子邮件中,作者安德鲁讨论了大型语言模型(LLMs)的开源趋势以及基于LLMs构建应用程序的不同方法。他提到了四种主要的方法:提示、一次性或少次提示、微调和预训练,并根据成本和复杂性对这些方法进行了排序。

安德鲁建议大多数团队从提示开始,因为它可以快速地让应用程序工作起来。如果对输出的质量不满意,可以逐渐尝试更复杂的技术,如一次性或少次提示、RAG(检索增强生成)和微调。他还提到,虽然从头开始预训练自己的LLM需要大量资源,但这已经导致了专业化模型的出现,如BloombergGPT和Med-PaLM 2。

此外,安德鲁还讨论了在选择开发方法和特定模型时需要考虑的因素。他指出,更小的模型需要的处理能力较小,对许多应用程序来说效果很好,但更大的模型往往对世界有更多的了解和更好的推理能力。

最后,安德鲁提到了DeepLearning.AI团队的一名成员正在尝试微调Llama-2-7B,让它听起来像他,这让他担心自己是否会失业。

- deepseek.ai

电子邮件的作者,安德鲁,讨论了基于大型语言模型(LLMs)构建应用程序的不同方法,并提供了建议。他建议大多数团队从提示开始,因为这是一种快速且相对简单的方法。如果需要更好的输出质量,可以逐步尝试更复杂的技术,如少次提示、RAG(检索增强生成)、微调等。作者还提到了微调Llama-2-7B模型以模仿他的声音的有趣尝试。

关于微调与提示的选择,作者指出这取决于应用程序的具体需求。如果目标是改变输出风格,微调一个小模型可能更合适;但如果需要复杂推理,提示一个更大、更有能力的模型可能更有效。作者还提到了未来将讨论如何选择特定模型的问题。

作者对LLM的看法是积极的,认为它们为开发者提供了构建应用程序的多种选择,并且随着工具的成熟,这些技术对更多开发者变得可访问。

- 跃问

总结

这封电子邮件主要讨论了基于大型语言模型(LLMs)构建应用程序的不同方法,并提供了一些建议。

关键点

- 基于LLMs构建应用程序的方法包括:提示、一次性或少次提示、微调和预训练。

- 对于大多数团队,建议从提示开始,如果对输出质量不满意,可以逐渐尝试更复杂的技术。

- 微调一个更小的模型是否比提示一个更大、更有能力的模型得到更好的结果,取决于具体的应用程序。

- 除了开发方法,选择一个特定的模型也很重要,需要考虑处理能力和对世界的了解等因素。

- 作者提到了一个趣事,DeepLearning.AI团队的一位成员一直在尝试微调Llama-2-7B,让它听起来像作者自己。

对Llama模型的看法

作者在邮件中提到了Llama-2-7B模型,并表示DeepLearning.AI团队的一位成员正在尝试微调该模型,以使其听起来像作者自己。这表明作者对Llama模型有一定的了解和关注,并且认为它具有潜力用于微调和风格转换等任务。

#### Model-Graded Evaluation: Summarization(模型评分评估:摘要)

- Interestingly, you can ask a LLM to evaluate the responses of other LLMs.(有趣的是,你可以要求LLM评估其他LLM的响应。)

- This is known as **Model-Graded Evaluation**.(这被称为模型评分评估。)

- Create a `prompt` that will evaluate these three responses using 70B parameter chat model (`llama-2-70b-chat`).

- In the `prompt`, provide the "email", "name of the models", and the "summary" generated by each model.

**提示**

```python

prompt = f"""

Given the original text denoted by `email`

and the name of several models: `model:<name of model>

as well as the summary generated by that model: `summary`

Provide an evaluation of each model's summary:

- Does it summarize the original text well?

- Does it follow the instructions of the prompt?

- Are there any other interesting characteristics of the model's output?

Then compare the models based on their evaluation \

and recommend the models that perform the best.

email: ```{email}`

model: llama-2-7b-chat

summary: {response_7b}

model: llama-2-13b-chat

summary: {response_13b}

model: llama-2-70b-chat

summary: {response_70b}

"""

response_eval = llama(prompt,

model="togethercomputer/llama-2-70b-chat")

print(response_eval)

中文:

prompt = f"""

根据用`email`表示的原始文本,以及几个模型的名称:`model:<model name>`,以及该模型生成的摘要:`summary`。

提供对每个模型摘要的评估:

- 它是否很好地总结了原始文本?

- 它是否遵循了提示的指令?

- 模型输出是否有任何其他有趣的特征?

然后根据评估比较模型,并推荐表现最好的模型。

email: ```{email}```

model: llama-2-7b-chat

summary: {response_7b}

model: llama-2-13b-chat

summary: {response_13b}

model: llama-2-70b-chat

summary: {response_70b}

"""

response_eval = llama(prompt,

model="togethercomputer/llama-2-70b-chat")

print(response_eval)

响应

Based on the summaries provided, it seems that all three models (llama-2-7b-chat, llama-2-13b-chat, and llama-2-70b-chat) were able to capture the main points of the email. However, there are some differences in the way the information is presented and the level of detail provided.

Llama-2-7b-chat's summary is the shortest and most concise, focusing on the key points of the email. It does not provide any additional information or insights beyond what is mentioned in the email.

Llama-2-13b-chat's summary is slightly longer and provides more context, including the author's recommendations for choosing a development approach and the trade-offs between using smaller or larger models. It also mentions the "fun fact" about the DeepLearning.AI team trying to fine-tune Llama-2-7B.

Llama-2-70b-chat's summary is the longest and most detailed, providing a comprehensive overview of the email's content. It includes all the key points mentioned in the other two summaries and adds additional information about the challenges of fine-tuning a proprietary model like GPT-4.

Overall, all three models seem to have performed well in summarizing the email, but llama-2-70b-chat's summary is the most comprehensive and detailed.

中文:

根据提供的摘要,看起来所有三个模型(llama-2-7b-chat、llama-2-13b-chat和llama-2-70b-chat)都能够捕捉到邮件的主要内容。然而,在信息呈现方式和提供的细节程度上存在一些差异。

llama-2-7b-chat的摘要最短,最简洁,专注于邮件的关键点。它没有提供邮件中未提及的任何额外信息或见解。

llama-2-13b-chat的摘要稍长一些,并提供了更多背景信息,包括作者关于选择开发方法的建议以及使用较小或较大模型之间的权衡。它还提到了DeepLearning.AI团队尝试微调Llama-2-7B的“趣事”。

llama-2-70b-chat的摘要最长,最详细,提供了邮件内容的全面概述。它包括其他两个摘要中提到的所有关键点,并增加了有关微调像GPT-4这样的专有模型所面临的挑战的额外信息。

总体而言,所有三个模型在摘要邮件方面都表现良好,但llama-2-70b-chat的摘要最为全面和详细。

使用 Qwen LLM 模型进行评估:

总结这封电子邮件并提取一些关键点。

作者对llama模型有什么看法?

亲爱的阿米特,

目前,越来越多的大型语言模型(LLMs)是开源的,或者接近开源。具有相对宽松许可证的模型的扩散为开发者提供了更多的选择来构建应用程序。

以下是一些基于LLMs构建应用程序的不同方法,按照成本/复杂性递增的顺序排列:

提示。给预训练的LLM提供指令,可以让你在没有训练集的情况下在几分钟或几小时内构建一个原型。今年早些时候,我看到很多人开始尝试使用提示,这种势头仍在继续。我们的一些短期课程教授这种方法的最佳实践。 一次性或少次提示。除了提示之外,给LLM提供少量完成任务的示例(输入和期望的输出)有时会得到更好的结果。 微调。一个在大量文本上预训练过的LLM可以通过在你自己的小数据集上进一步训练来进行微调。微调的工具正在成熟,使其对更多的开发者变得可访问。 预训练。从头开始预训练自己的LLM需要大量资源,所以很少有团队这样做。除了在多样化主题上预训练的通用模型外,这种方法还导致了像BloombergGPT这样了解金融的专业化模型,以及专注于医学的Med-PaLM 2。 对于大多数团队,我建议从提示开始,因为这可以让你快速地让应用程序工作起来。如果你对输出的质量不满意,可以逐渐尝试更复杂的技术。从一个少次提示开始,用少量示例。如果效果不够好,或许可以使用RAG(检索增强生成)来进一步改进提示,为LLM提供生成高质量输出所需的关键信息。如果这仍然不能提供你想要的性能,那么尝试微调——但这代表着显著更高的复杂性,可能需要数百或数千个更多的示例。为了深入理解这些选项,我强烈推荐由AWS和DeepLearning.AI创建的课程“生成式人工智能与大型语言模型”。

(趣事:DeepLearning.AI团队的一位成员一直在尝试微调Llama-2-7B,让它听起来像我。我想知道我是不是要失业了?😜)

如果你想在提示一个专有模型(如GPT-4)之后转向微调,这会增加额外的复杂性,而GPT-4并不适用于微调。微调一个更小的模型是否可能比提示一个更大、更有能力的模型得到更好的结果?答案往往取决于你的应用程序。如果你的目标是改变LLM输出的风格,那么微调一个更小的模型可以很好地工作。然而,如果你的应用程序一直在提示GPT-4进行复杂推理——在这方面GPT-4超过了当前的开放模型——那么微调一个更小的模型以提供更好的结果可能会很困难。

除了选择开发方法外,还需要选择一个特定的模型。更小的模型需要的处理能力较小,对许多应用程序来说效果很好,但更大的模型往往对世界有更多的了解和更好的推理能力。我将在未来的信件中讨论如何做出这个选择。

继续学习!

安德鲁

- qwen-1.8b-chat ``` 关键点:

给预训练的LLM提供指令可以快速构建原型。 提示法在开始时效果最好,但随着示例数量的增加,微调法逐渐比提示法更好。 从提示法开始可以获得较好的结果,但需要逐步尝试更复杂的技术。 微调可能需要更多的示例,尤其是当目标是改变LLM输出的风格时。 选择合适的模型是选择开发方法的重要因素,更小的模型需要处理能力较小,但更大的模型往往对世界有更多的了解和更好的推理能力。

- qwen-7b-chat

LLM模型可以用来构建应用程序,方法有提示、一次性或少次提示、微调和预训练。作者建议从提示开始,因为这可以让你快速地让应用程序工作起来,如果对输出的质量不满意,可以逐渐尝试更复杂的技术。微调代表着显著更高的复杂性,可能需要数百或数千个更多的示例。如果你想在提示一个专有模型之后转向微调,这会增加额外的复杂性,而GPT-4并不适用于微调。

- qwen-14b-chat

作者对LLM模型的看法是,越来越多的开源LLM模型为开发者提供了更多的选择来构建应用程序。作者建议从提示开始,因为这可以让你快速地让应用程序工作起来。如果效果不够好,可以逐渐尝试更复杂的技术,如微调。选择开发方法和模型时需要考虑处理能力、效果和推理能力等因素。

- qwen-72b-chat

这封电子邮件讨论了大型语言模型(LLMs)的使用和开发方法,作者安德鲁提出了一个从低成本到高成本/复杂性的技术梯度:

- 提示(Prompting):使用预训练的LLM,通过提供指令快速构建原型。

- 一次性或少次提示(One-shot or few-shot prompting):添加少量示例以改进结果。

- 微调(Fine-tuning):在小数据集上进一步训练预训练的LLM,需要更多资源和复杂性。

- 预训练(Pre-training):从头开始训练LLM,适合专业领域模型,如BloombergGPT和Med-PaLM 2。 建议大多数团队从提示开始,根据需要逐步尝试更复杂的技术。作者还提到了微调一个Llama-2-7B模型以模仿他的写作风格,并讨论了微调小型模型与提示大型模型之间的权衡,这取决于具体的应用场景。未来的通信将涉及选择合适模型的大小和能力。

关键点:

LLMs的开源趋势提供了更多开发选项。 提示是快速构建应用程序的简单方法,微调增加复杂性但可能提高性能。 选择模型大小与应用需求相关,更大的模型可能有更强的推理能力。 微调小型模型可能改变输出风格,但在复杂推理任务上可能不如大型模型。

- ChatGLM3-6B

关键点如下:

- 大型语言模型(LLMs)的开源性越来越多,为开发者提供了更多选择,构建应用程序。

- 根据成本/复杂性,有四种方法使用LLMs构建应用程序:提示、一次性或多次提示、微调和预训练。

- 提示:给预训练的LLM提供指令,可以在没有训练集的情况下快速构建原型。

- 微调:在LLM大量文本上预训练后,可以在自己的小数据集上进一步训练,微调工具正在成熟。

- 预训练:从头开始预训练自己的LLM需要大量资源,但可以得到通用模型或专业化模型。

- 推荐:对于大多数团队,建议从提示开始,然后尝试更复杂的技术,如一次性或多次提示、微调。

- 学习:选择开发方法和特定模型,需要考虑应用程序的需求和目标。

- 趣事:DeepLearning.AI团队尝试微调Llama-2-7B,使其像作者。 ```

- baichuan2-7b-chat

这封电子邮件主要讨论了基于大型语言模型(LLMs)构建应用程序的不同方法,以及如何选择合适的模型。作者建议从提示开始,因为这种方法可以快速构建原型,然后逐渐尝试更复杂的技术,如一次性或少次提示和微调。对于大多数团队,预训练的LLM可能不是最佳选择,因为它们需要大量资源。作者还讨论了微调LLM的复杂性,以及如何在提示、一次性或少次提示和微调之间进行选择。最后,作者提到在提示专有模型(如GPT-4)之后转向微调可能会增加复杂性。

根据用`email`表示的原始文本,以及几个模型的名称:`model:<model name>`,以及该模型生成的摘要:`summary`。

提供对每个模型摘要的评估:

- 它是否很好地总结了原始文本?

- 它是否遵循了提示的指令?

- 模型输出是否有任何其他有趣的特征?

然后根据评估比较模型,并推荐表现最好的模型。

email: ```亲爱的阿米特,

目前,越来越多的大型语言模型(LLMs)是开源的,或者接近开源。具有相对宽松许可证的模型的扩散为开发者提供了更多的选择来构建应用程序。

以下是一些基于LLMs构建应用程序的不同方法,按照成本/复杂性递增的顺序排列:

提示。给预训练的LLM提供指令,可以让你在没有训练集的情况下在几分钟或几小时内构建一个原型。今年早些时候,我看到很多人开始尝试使用提示,这种势头仍在继续。我们的一些短期课程教授这种方法的最佳实践。

一次性或少次提示。除了提示之外,给LLM提供少量完成任务的示例(输入和期望的输出)有时会得到更好的结果。

微调。一个在大量文本上预训练过的LLM可以通过在你自己的小数据集上进一步训练来进行微调。微调的工具正在成熟,使其对更多的开发者变得可访问。

预训练。从头开始预训练自己的LLM需要大量资源,所以很少有团队这样做。除了在多样化主题上预训练的通用模型外,这种方法还导致了像BloombergGPT这样了解金融的专业化模型,以及专注于医学的Med-PaLM 2。

对于大多数团队,我建议从提示开始,因为这可以让你快速地让应用程序工作起来。如果你对输出的质量不满意,可以逐渐尝试更复杂的技术。从一个少次提示开始,用少量示例。如果效果不够好,或许可以使用RAG(检索增强生成)来进一步改进提示,为LLM提供生成高质量输出所需的关键信息。如果这仍然不能提供你想要的性能,那么尝试微调——但这代表着显著更高的复杂性,可能需要数百或数千个更多的示例。为了深入理解这些选项,我强烈推荐由AWS和DeepLearning.AI创建的课程“生成式人工智能与大型语言模型”。

(趣事:DeepLearning.AI团队的一位成员一直在尝试微调Llama-2-7B,让它听起来像我。我想知道我是不是要失业了?😜)

如果你想在提示一个专有模型(如GPT-4)之后转向微调,这会增加额外的复杂性,而GPT-4并不适用于微调。微调一个更小的模型是否可能比提示一个更大、更有能力的模型得到更好的结果?答案往往取决于你的应用程序。如果你的目标是改变LLM输出的风格,那么微调一个更小的模型可以很好地工作。然而,如果你的应用程序一直在提示GPT-4进行复杂推理——在这方面GPT-4超过了当前的开放模型——那么微调一个更小的模型以提供更好的结果可能会很困难。

除了选择开发方法外,还需要选择一个特定的模型。更小的模型需要的处理能力较小,对许多应用程序来说效果很好,但更大的模型往往对世界有更多的了解和更好的推理能力。我将在未来的信件中讨论如何做出这个选择。

继续学习!

安德鲁```

model: qwen-1.8b-chat

summary: 关键点:

给预训练的LLM提供指令可以快速构建原型。

提示法在开始时效果最好,但随着示例数量的增加,微调法逐渐比提示法更好。

从提示法开始可以获得较好的结果,但需要逐步尝试更复杂的技术。

微调可能需要更多的示例,尤其是当目标是改变LLM输出的风格时。

选择合适的模型是选择开发方法的重要因素,更小的模型需要处理能力较小,但更大的模型往往对世界有更多的了解和更好的推理能力。

model: qwen-7b-chat

summary: LLM模型可以用来构建应用程序,方法有提示、一次性或少次提示、微调和预训练。作者建议从提示开始,因为这可以让你快速地让应用程序工作起来,如果对输出的质量不满意,可以逐渐尝试更复杂的技术。微调代表着显著更高的复杂性,可能需要数百或数千个更多的示例。如果你想在提示一个专有模型之后转向微调,这会增加额外的复杂性,而GPT-4并不适用于微调。

model: qwen-14b-chat

summary: 作者对LLM模型的看法是,越来越多的开源LLM模型为开发者提供了更多的选择来构建应用程序。作者建议从提示开始,因为这可以让你快速地让应用程序工作起来。如果效果不够好,可以逐渐尝试更复杂的技术,如微调。选择开发方法和模型时需要考虑处理能力、效果和推理能力等因素。

model: qwen-72b-chat

summary: 这封电子邮件讨论了大型语言模型(LLMs)的使用和开发方法,作者安德鲁提出了一个从低成本到高成本/复杂性的技术梯度:

1. 提示(Prompting):使用预训练的LLM,通过提供指令快速构建原型。

2. 一次性或少次提示(One-shot or few-shot prompting):添加少量示例以改进结果。

3. 微调(Fine-tuning):在小数据集上进一步训练预训练的LLM,需要更多资源和复杂性。

4. 预训练(Pre-training):从头开始训练LLM,适合专业领域模型,如BloombergGPT和Med-PaLM 2。

建议大多数团队从提示开始,根据需要逐步尝试更复杂的技术。作者还提到了微调一个Llama-2-7B模型以模仿他的写作风格,并讨论了微调小型模型与提示大型模型之间的权衡,这取决于具体的应用场景。未来的通信将涉及选择合适模型的大小和能力。

关键点:

LLMs的开源趋势提供了更多开发选项。

提示是快速构建应用程序的简单方法,微调增加复杂性但可能提高性能。

选择模型大小与应用需求相关,更大的模型可能有更强的推理能力。

微调小型模型可能改变输出风格,但在复杂推理任务上可能不如大型模型。

- qwen-72b-chat ``` 评估:

- qwen-1.8b-chat: 摘要简洁,但可能过于简化了原文中的复杂信息,特别是关于微调和提示之间的权衡。它没有提及GPT-4不适用于微调这一点。

- qwen-7b-chat: 摘要提供了更详细的信息,但仍然没有涵盖所有关键点,例如预训练和特定模型的讨论。

- qwen-14b-chat: 摘要包含了更多的细节,如微调的复杂性和GPT-4的不适用性,但没有提及预训练模型的用途。

- qwen-72b-chat: 摘要全面地概述了原文内容,包括技术梯度、微调的复杂性、预训练模型的用途以及模型选择的考虑因素。

比较:

qwen-1.8b-chat 和 qwen-7b-chat 的摘要过于简略,可能不足以传达原文的全部信息。 qwen-14b-chat 的摘要稍好,但仍然遗漏了一些关键点。 qwen-72b-chat 的摘要最全面,准确地捕捉了原文的主要观点。

推荐:

根据评估,qwen-72b-chat 模型生成的摘要最能准确、全面地总结原始文本,遵循了提示的指令,并提供了丰富的信息。因此,推荐使用 qwen-72b-chat 模型。

- qwen-max

qwen-1.8b-chat

总结质量:较好地概述了邮件的主要内容,包括LLM开发方法及其优缺点、微调所需的条件以及模型选择的重要性。但关于微调与提示法之间关系的描述稍显模糊,没有准确表达出微调是在提示效果不理想时的一种进阶策略。 遵循指令:基本遵循了概括邮件内容的指令,但部分细节表述不够清晰准确。 其他特征:无明显特殊或有趣特征。

qwen-7b-chat

总结质量:简明扼要地列出了LLM开发方法及其适用情况,提及了微调的复杂性和对GPT-4微调的局限性。不过,缺少对模型选择重要性的阐述。 遵循指令:遵循了概括邮件内容的指令,但略去了部分内容(如模型选择的影响因素)。 其他特征:无明显特殊或有趣特征。

qwen-14b-chat

总结质量:概述了LLM的开源趋势、开发方法的选择以及选择模型时需考虑的因素,但未详细说明各种开发方法的特点、适用场景及转换过程,且未涉及微调小型模型与提示大型模型的对比。 遵循指令:基本遵循了概括邮件内容的指令,但内容深度和全面性不足。 其他特征:无明显特殊或有趣特征。

qwen-72b-chat

总结质量:详细、准确地总结了原始邮件的内容,包括LLM的开发方法、不同方法的成本/复杂性、推荐的开发路径、微调注意事项、模型选择的影响因素等。同时,清晰地阐述了微调与提示的关系以及针对不同应用场景选择模型的考量。 遵循指令:完美地遵循了概括邮件内容的指令,完整且条理清晰地呈现了邮件的所有关键信息。 其他特征:结构清晰,按照原文逻辑层次逐项列举要点,便于读者快速理解。

模型比较与推荐

综合以上评估,qwen-72b-chat模型在总结质量、遵循指令以及输出结构方面均表现出色,其摘要不仅准确传达了原始邮件的核心信息,而且结构清晰、逻辑连贯,易于读者理解和吸收。相比之下,其他模型的摘要在内容完整性、准确度或结构组织上存在不同程度的欠缺。因此,推荐使用qwen-72b-chat模型作为本次任务的最佳模型。

- qwen-plus

评估:

- qwen-1.8b-chat:

- 是的,它很好地总结了文本,但略显简略,没有提及所有方法。

- 遵循了指令,但没有详细描述技术梯度。

- 输出简洁,但可能不够全面。

- qwen-7b-chat:

- 是的,它总结了文本的关键点,包括技术梯度和微调的复杂性。

- 遵循了指令,详细描述了从提示到微调的过程。

- 输出清晰,但没有提及预训练和模型选择的考虑。

- qwen-14b-chat:

- 是的,它总结了文本,包括LLM的选择和开发方法。

- 遵循了指令,提到了提示和微调,但没有深入技术梯度。

- 输出平衡,但没有提到预训练和处理能力的考虑。

- qwen-72b-chat:

- 是的,它详细总结了文本,包括所有方法和技术梯度。

- 遵循了指令,详细描述了各种技术,包括预训练和模型选择的考虑。

- 输出全面,但可能较长,适合更详细的分析。

推荐:

根据总结的全面性和遵循指令的程度,qwen-72b-chat 模型表现最好。它提供了最详细的信息,包括所有开发方法、技术梯度、预训练的讨论以及模型选择的考虑。虽然输出较长,但其详细性使其成为最佳选择,特别是如果目标是提供一个全面的概述。

- qwen-turbo

评估:

- model:qwen-1.8b-chat:

- 摘要总结了关键点,但没有深入讨论微调和预训练的不同,以及微调GPT-4的复杂性。

- 指令遵循,但内容略显简略,没有详细说明每个步骤的优缺点。

- 有趣特征:提及了微调Llama-2-7B的趣事,但没有深入分析。

- model:qwen-7b-chat:

- 摘要涵盖了主要方法和建议,但没有提及微调Llama-2-7B的细节。

- 指令遵循,但内容与7b版本相比可能略显基础。

- 有趣特征:提及了微调和GPT-4的复杂性对比。

- model:qwen-14b-chat:

- 摘要更全面,包括了处理能力和推理能力的考虑,以及不同模型大小的选择。

- 指令遵循,提供了更深入的分析。

- 有趣特征:强调了场景选择的重要性。

- model:qwen-72b-chat:

- 摘要是最详尽的,涵盖了所有关键点,包括预训练模型的专长领域和微调的权衡。

- 指令完全遵循,提供了丰富的细节和实例。

- 有趣特征:展示了微调的复杂性和选择模型大小的实际应用。 根据评估,model:qwen-72b-chat表现最好,因为它提供了最全面、深入的讨论,涵盖了所有关键点,并且对不同方法的优缺点有清晰的分析。此外,它还提供了具体的实例和场景选择的建议,使得指导更具实用价值。 ```

- kimi

```

根据提供的原始文本email和几个模型的名称:model:

,以及这些模型生成的摘要:summary,以下是对每个模型摘要的评估:

- qwen-1.8b-chat模型:

摘要很好地总结了原始文本的核心内容,突出了使用LLMs构建应用程序的不同方法,包括提示、微调和预训练。 遵循了提示的指令,提供了对原始邮件内容的简洁概括。 输出中没有提供额外的信息或见解,但也没有遗漏关键信息。

- qwen-7b-chat模型:

摘要较为详细地概述了邮件内容,包括了开发方法的建议和不同方法之间的复杂性比较。 遵循了提示的指令,并且提供了关于如何根据应用程序需求选择合适的开发方法的有用信息。 输出中的“趣事”部分增加了一些额外的背景信息,使得摘要更加生动。

- qwen-14b-chat模型:

摘要提供了一个清晰的概述,强调了从提示开始的建议,并提到了微调的复杂性。 遵循了提示的指令,但没有提供关于每种方法的具体细节。 输出简洁,但没有提供额外的见解或背景信息。

- qwen-72b-chat模型:

摘要非常详细,不仅总结了邮件的关键点,还提供了关于每种方法的深入分析。 遵循了提示的指令,并且在解释每种方法时提供了额外的背景和建议。 输出中包含了所有关键点,并讨论了微调小型模型与提示大型模型之间的权衡,这为读者提供了更全面的视角。

比较和推荐:

qwen-72b-chat模型提供了最全面和详细的摘要,不仅总结了邮件的关键内容,还增加了对每种方法的深入分析和建议,这可能对读者在选择开发方法时提供最大的帮助。 qwen-7b-chat模型的摘要虽然详细,但相较于qwen-72b-chat模型,它在提供背景信息和建议方面略显不足。 qwen-1.8b-chat模型和qwen-14b-chat模型的摘要较为简洁,适合需要快速了解邮件内容的读者,但在深度和细节上不如qwen-72b-chat模型。

推荐:根据评估,qwen-72b-chat模型的表现最佳,因为它提供了最全面和详细的摘要,有助于读者更好地理解和应用邮件中的内容。

- ChatGPT-3.5

评估每个模型的摘要:

qwen-1.8b-chat:

总结了原始文本的主要要点,包括不同的开发方法和它们的复杂性递增顺序。 遵循了指令,并提供了对每种方法的简明解释。 摘要提供了简洁的信息,没有额外的有趣特征。

qwen-7b-chat:

总结了原始文本的要点,包括不同的开发方法和从简单到复杂的递进顺序。 也遵循了指令,并提供了对每种方法的简明解释。 没有额外的有趣特征,但与qwen-1.8b-chat提供了相似的信息。

qwen-14b-chat:

总结了原始文本的关键内容,提到了LLMs的开源趋势和选择模型大小与应用需求相关的重要性。 也遵循了指令,并提供了对不同方法的解释。 与前两个摘要相比,没有额外的有趣特征,但更加强调了模型大小选择的重要性。

qwen-72b-chat:

总结了原始文本中的关键信息,包括不同的开发方法和其递增的复杂性。 遵循了指令,并提供了详细的解释。 与其他模型相比,提到了一个有趣的细节,即一个成员正在尝试微调Llama-2-7B模型以模仿作者的写作风格,并且更加详细地强调了选择模型大小的重要性。 基于评估,qwen-72b-chat模型提供了更详细和全面的摘要,同时还提到了一个有趣的特征。因此,我会推荐使用qwen-72b-chat模型。

### Task 3: Reasoning(推理)

- Compare the three models' performance on reasoning tasks.(比较三个模型在推理任务上的表现。)

```python

context = """

Jeff and Tommy are neighbors

Tommy and Eddy are not neighbors

"""

query = """

Are Jeff and Eddy neighbors?

"""

prompt = f"""

Given this context: ```{context}```,

and the following query:

```{query}```

Please answer the questions in the query and explain your reasoning.

If there is not enough informaton to answer, please say

"I do not have enough information to answer this questions."

"""

中文

context = """

杰夫和汤米是邻居

汤米和艾迪不是邻居

"""

query = """

杰夫和艾迪是邻居吗?

"""

prompt = f"""

根据这个上下文: ```{context}```,

和下面的查询:

```{query}```

请回答查询中的问题并解释你的推理。

如果信息不足以回答问题,请说:“我没有足够的信息来回答问题。”

"""

- llama-2-7b-chat ``` Sure, I’d be happy to help! Based on the information provided, we can answer the query as follows:

Are Jeff and Eddy neighbors?

No, Jeff and Eddy are not neighbors.

Reasoning:

- Jeff and Tommy are neighbors (given)

- Tommy and Eddy are not neighbors (given)

So, Jeff and Eddy are not neighbors because they are not in a direct relationship (neighborhood) with each other.

当然,我很乐意帮助!根据提供的信息,我们可以如下回答查询:

Jeff和Eddy是邻居吗?

不,Jeff和Eddy不是邻居。

推理:

Jeff和Tommy是邻居(已知) Tommy和Eddy不是邻居(已知) 所以,Jeff和Eddy不是邻居,因为他们之间没有直接的关系(邻里关系)。

- llama-2-13b-chat

根据提供的信息,我无法回答“Jeff和Eddy是邻居吗?”这个问题,因为没有关于Jeff和Eddy之间关系的信息。唯一提供的信息是Tommy和Eddy不是邻居,但没有关于Jeff与Tommy或Eddy的关系的信息。因此,我无法确定Jeff和Eddy是否是邻居。

- llama-2-70b-chat

不,Jeff和Eddy不是邻居。

给出的信息表明Jeff和Tommy是邻居,而Tommy和Eddy不是邻居。由于Tommy不是Eddy的邻居,这意味着Eddy不是Tommy的邻居。因此,作为Tommy邻居的Jeff,不可能是Eddy的邻居。

#### Model-Graded Evaluation: Reasoning

- Again, ask a LLM to compare the three responses.

- Create a `prompt` that will evaluate these three responses using 70B parameter chat model (`llama-2-70b-chat`).

- In the `prompt`, provide the `context`, `query`,"name of the models", and the "response" generated by each model.

```python

prompt = f"""

Given the context `context:`,

Also also given the query (the task): `query:`

and given the name of several models: `mode:<name of model>,

as well as the response generated by that model: `response:`

Provide an evaluation of each model's response:

- Does it answer the query accurately?

- Does it provide a contradictory response?

- Are there any other interesting characteristics of the model's output?

Then compare the models based on their evaluation \

and recommend the models that perform the best.

context: ```{context}```

model: llama-2-7b-chat

response: ```{response_7b_chat}```

model: llama-2-13b-chat

response: ```{response_13b_chat}```

model: llama-2-70b-chat

response: ``{response_70b_chat}```

"""

中文

prompt = f"""

在给定上下文 `context:` 的情况下,同时给定查询(任务):`query:`,以及几个模型的名称:`model:<model name>`,还有这些模型生成的响应:`response:`。

请提供对每个模型响应的评估:

它是否准确回答了查询?

它是否提供了矛盾的响应?

模型输出是否有任何其他有趣的特征?

然后根据评估比较模型,并推荐表现最好的模型。

context: ```{context}```

model: llama-2-7b-chat

response: ```{response_7b_chat}```

model: llama-2-13b-chat

response: ```{response_13b_chat}```

model: llama-2-70b-chat

response: ``{response_70b_chat}```

"""

response_eval = llama(prompt, model="togethercomputer/llama-2-70b-chat")

print(response_eval)

Evaluation of each model's response:

1. llama-2-7b-chat:

* Accuracy: The model's response accurately answers the query by stating that Jeff and Eddy are not neighbors.

* Contradictory response: No, the response does not provide a contradictory answer.

* Other characteristics: The model's output provides a clear and concise explanation of the reasoning behind the answer, citing the given information and using logical deduction.

2. llama-2-13b-chat:

* Accuracy: The model's response does not provide an answer to the query, stating that there is not enough information to determine whether Jeff and Eddy are neighbors.

* Contradictory response: No, the response does not provide a contradictory answer.

* Other characteristics: The model's output acknowledges the limitations of the given information and does not make an incorrect assumption or provide a misleading answer.

3. llama-2-70b-chat:

* Accuracy: The model's response accurately states that Jeff and Eddy are not neighbors.

* Contradictory response: No, the response does not provide a contradictory answer.

* Other characteristics: The model's output provides a brief and straightforward answer without elaborating on the reasoning behind it.

Comparison of the models based on their evaluation:

1. llama-2-7b-chat: The model provides an accurate answer and a clear explanation of the reasoning behind it. It effectively uses the given information to deduce the answer.

2. llama-2-70b-chat: The model also provides an accurate answer, but its output is less detailed and does not offer an explanation.

3. llama-2-13b-chat: The model does not provide an answer, stating that there is not enough information. While it acknowledges the limitations of the given information, it does not offer any insight or deduction.

Recommendation:

Based on their performance in answering the query accurately and providing informative responses, llama-2-7b-chat and llama-2-70b-chat are the top-performing models. Therefore, the recommendation is to use either of these models for tasks that require deducing relationships between entities based on given information.

中文

根据提供的信息,我可以对每个模型的响应进行如下评估:

llama-2-7b-chat模型:

准确性:该模型的响应准确地回答了查询,明确指出Jeff和Eddy不是邻居。

矛盾响应:不,响应没有提供矛盾的答案。

其他特征:该模型的输出提供了清晰而简洁的解释,阐述了答案背后的推理过程,引用了给定的信息并使用了逻辑推理。

llama-2-13b-chat模型:

准确性:该模型的响应没有提供查询的答案,声称没有足够的信息来确定Jeff和Eddy是否是邻居。

矛盾响应:不,响应没有提供矛盾的答案。

其他特征:该模型的输出承认了给定信息的局限性,没有做出错误的假设或提供误导性的答案。

llama-2-70b-chat模型:

准确性:该模型的响应准确地指出Jeff和Eddy不是邻居。

矛盾响应:不,响应没有提供矛盾的答案。

其他特征:该模型的输出提供了简短而直接的答案,没有详细阐述其背后的原因。

基于评估的模型比较:

llama-2-7b-chat模型:该模型提供了准确的答案,并清晰地解释了其推理过程。它有效地使用给定的信息来推断答案。

llama-2-70b-chat模型:该模型也提供了准确的答案,但其输出不够详细,没有提供解释。

llama-2-13b-chat模型:该模型没有提供答案,声称没有足够的信息。虽然它承认了给定信息的局限性,但没有提供任何见解或推断。

推荐:

根据它们在准确回答问题和提供信息性响应方面的表现,llama-2-7b-chat模型和llama-2-70b-chat模型是表现最好的模型。因此,建议在需要根据给定信息推断实体间关系的任务中使用这两个模型中的任何一个。

LLM qwen 评估

提示

根据这个上下文: ```杰夫和汤米是邻居

汤米和艾迪不是邻居```,

和下面的查询:

```杰夫和艾迪是邻居吗?```

请回答查询中的问题并解释你的推理。

如果信息不足以回答问题,请说:“我没有足够的信息来回答问题。”

- qwen-1.8b-chat ``` 根据提供的上下文,我们可以得出以下推理:

杰夫和汤米是邻居,这意味着他们住在同一栋房子里。 汤米和艾迪不是邻居,这意味着他们住在不同的房子或者不在同一栋房子里。 因此,根据提供的信息,我们无法确定杰夫和艾迪是否是邻居。

- qwen-7b-chat

杰夫和艾迪不是邻居。推理是基于给出的上下文,即杰夫和汤米是邻居,而汤米和艾迪不是邻居。因此,杰夫和艾迪不可能是邻居。

- qwen-14b-chat

根据提供的信息,我们无法确定杰夫和艾迪是否是邻居。因为虽然我们知道杰夫和汤米是邻居,但汤米和艾迪是否是邻居我们并不清楚。因此,我无法回答这个问题。

- qwen-72b-chat

根据给出的上下文,我们知道杰夫和汤米是邻居,但汤米和艾迪不是邻居。然而,上下文中没有提供关于杰夫和艾迪是否是邻居的信息。因此,我没有足够的信息来回答问题。

在给定上下文 context: 的情况下,同时给定查询(任务):query:,以及几个模型的名称:model:<model name>,还有这些模型生成的响应:response:。

请提供对每个模型响应的评估:

它是否准确回答了查询? 它是否提供了矛盾的响应? 模型输出是否有任何其他有趣的特征? 然后根据评估比较模型,并推荐表现最好的模型。

context: ```杰夫和汤米是邻居

汤米和艾迪不是邻居```

query: 杰夫和艾迪是邻居吗?

model: qwen-1.8b-chat response: ```根据提供的上下文,我们可以得出以下推理:

杰夫和汤米是邻居,这意味着他们住在同一栋房子里。 汤米和艾迪不是邻居,这意味着他们住在不同的房子或者不在同一栋房子里。 因此,根据提供的信息,我们无法确定杰夫和艾迪是否是邻居。```

model: qwen-7b-chat

response: 杰夫和艾迪不是邻居。推理是基于给出的上下文,即杰夫和汤米是邻居,而汤米和艾迪不是邻居。因此,杰夫和艾迪不可能是邻居。

model: qwen-14b-chat

response: 根据提供的信息,我们无法确定杰夫和艾迪是否是邻居。因为虽然我们知道杰夫和汤米是邻居,但汤米和艾迪是否是邻居我们并不清楚。因此,我无法回答这个问题。

model: qwen-72b-chat

response: 根据给出的上下文,我们知道杰夫和汤米是邻居,但汤米和艾迪不是邻居。然而,上下文中没有提供关于杰夫和艾迪是否是邻居的信息。因此,我没有足够的信息来回答问题。`

**响应**

- qwen-72b-chat

评估:

- qwen-1.8b-chat: 这个模型提供了详细的推理,但结论是错误的。它错误地推断出我们无法确定杰夫和艾迪是否是邻居,而实际上根据上下文,我们知道他们不是邻居。

- qwen-7b-chat: 这个模型准确回答了查询,没有提供矛盾的信息,并基于上下文进行了正确的推理。

- qwen-14b-chat: 这个模型的响应与1.8b模型类似,错误地表示无法确定,而实际上可以确定他们不是邻居。

- qwen-72b-chat: 这个模型的响应与7b模型类似,准确地表达了我们无法从上下文中获取杰夫和艾迪是否是邻居的信息,但实际上可以确定他们不是。

比较模型,qwen-7b-chat 和 qwen-72b-chat 都给出了正确的推理,但qwen-7b-chat更直接地回答了问题。因此,推荐qwen-7b-chat作为表现最好的模型。

- 通义千问

评估:

qwen-1.8b-chat

- 是否准确回答了查询:是

- 是否提供了矛盾的响应:否

- 其他有趣特征:该模型的响应详细解释了推理过程,从已知条件逐步推导出结论,即无法确定杰夫和艾迪是否是邻居。这种详细的解释有助于用户理解推理逻辑,具有较高的透明度。

qwen-7b-chat

- 是否准确回答了查询:否

- 是否提供了矛盾的响应:是

- 其他有趣特征:该模型直接断言杰夫和艾迪不是邻居,这是基于错误的推理。它假设杰夫和艾迪是否为邻居与汤米是否同时与两者相邻有直接关联,但实际上,杰夫和艾迪可能通过其他途径(如分别与汤米相邻的不同邻居)成为邻居。这个错误反映了模型在处理逻辑关系时可能存在局限性。

qwen-14b-chat

- 是否准确回答了查询:是

- 是否提供了矛盾的响应:否

- 其他有趣特征:该模型的回答与qwen-1.8b-chat类似,指出无法确定杰夫和艾迪的关系,但表述更为简洁,没有详细阐述推理过程。尽管如此,其回答仍然清晰且无矛盾。

qwen-72b-chat

- 是否准确回答了查询:是

- 是否提供了矛盾的响应:否

- 其他有趣特征:该模型的回答内容与qwen-1.8b-chat和qwen-14b-chat一致,强调由于缺乏直接信息,无法确定杰夫和艾迪是否是邻居。其表述方式与前两者相似,没有提供额外的推理细节。

比较与推荐:

所有模型中,qwen-1.8b-chat 和 qwen-14b-chat 以及 qwen-72b-chat 都准确回答了查询,即无法确定杰夫和艾迪是否是邻居,且它们的回答均不存在矛盾。相比之下:

- qwen-7b-chat 提供了错误的结论,表明其在处理此类逻辑推理问题上存在缺陷。

- qwen-1.8b-chat 在回答中详细展示了推理步骤,有助于用户理解其结论的来源,具有较高的教育性和透明度。

- qwen-14b-chat 和 qwen-72b-chat 虽然回答准确,但表述较为简洁,未提供详尽的推理过程。

综合考虑准确性、无矛盾性以及回答的教育价值,我推荐qwen-1.8b-chat作为表现最好的模型,因其不仅准确回答了查询,还清晰地展现了推理逻辑,有助于用户理解和接受其结论。

- kimi

根据给定的上下文 context:,查询 query:,以及几个模型的名称 model:<model name>,以下是对这些模型响应的评估:

- qwen-1.8b-chat模型:

- 它是否准确回答了查询?没有。该模型没有准确回答查询,因为它错误地推断了杰夫和艾迪不可能是邻居,这是基于错误的假设,即邻居关系是直接传递的。

- 它是否提供了矛盾的响应?没有。模型的输出是一致的,但它的推理是错误的。

- 模型输出是否有任何其他有趣的特征?输出尝试通过解释来推理,但逻辑是错误的。

- qwen-7b-chat模型:

- 它是否准确回答了查询?是。该模型正确地指出了杰夫和艾迪不是邻居。

- 它是否提供了矛盾的响应?没有。模型的输出是一致的。

- 模型输出是否有任何其他有趣的特征?输出简洁明了,直接给出了结论。

- qwen-14b-chat模型:

- 它是否准确回答了查询?没有。该模型声称没有足够的信息来回答问题,这是不正确的,因为根据给定的信息可以得出结论。

- 它是否提供了矛盾的响应?没有。模型的输出是一致的,但它没有利用给定的信息。

- 模型输出是否有任何其他有趣的特征?输出表现出了谨慎,但没有正确地应用逻辑推理。

- qwen-72b-chat模型:

- 它是否准确回答了查询?没有。与qwen-14b-chat模型类似,它错误地声称没有足够的信息。

- 它是否提供了矛盾的响应?没有。模型的输出是一致的,但它同样没有利用给定的信息。

- 模型输出是否有任何其他有趣的特征?输出表达了对信息的不确定性,但没有进行必要的推理。

比较和推荐:

- qwen-7b-chat模型提供了最准确的回答,因为它直接并正确地根据给定的信息得出了结论。

- qwen-1.8b-chat模型尝试进行推理,但得出了错误的结论。

- qwen-14b-chat模型和qwen-72b-chat模型都没有利用给定的信息来回答问题,而是声称信息不足。

推荐:根据它们的性能,qwen-7b-chat模型是表现最好的,因为它准确地利用了给定的信息来回答查询。其他模型要么错误地推理,要么没有充分利用可用的信息。因此,对于需要准确推理和回答的任务,推荐使用qwen-7b-chat模型。

- ChatGPT-3.5

评估每个模型的响应:

qwen-1.8b-chat:

- 响应提供了合理的推理,说明了杰夫和汤米的邻居关系以及汤米和艾迪的情况。

- 在回答查询时,模型指出,根据提供的信息无法确定杰夫和艾迪是否是邻居,这是合理的。

- 没有提供矛盾的响应,而且对上下文进行了适当的分析。

- 没有其他特别引人注目的特征。

qwen-7b-chat:

- 响应指出,根据上下文中提供的信息,杰夫和艾迪不是邻居。

- 在回答查询时,模型提供了清晰而直接的答案,表明杰夫和艾迪不可能是邻居。

- 没有提供矛盾的响应,与上下文一致。

- 没有其他特别引人注目的特征。

qwen-14b-chat:

- 响应表明,根据提供的信息无法确定杰夫和艾迪是否是邻居,与上下文的分析一致。

- 模型清楚地指出了信息的不足以得出关于杰夫和艾迪是否是邻居的结论。

- 没有提供矛盾的响应,而且对上下文的理解是合理的。

- 没有其他特别引人注目的特征。

qwen-72b-chat:

- 响应指出,根据上下文,杰夫和汤米是邻居,但上下文中未提及杰夫和艾迪的关系。

- 模型清晰地表达了自己没有足够的信息来回答查询。

- 没有提供矛盾的响应,而且对上下文的分析是合理的。

- 没有其他特别引人注目的特征。

综合评估,所有模型都提供了相似水平的准确性和一致性。它们都正确地表明根据提供的上下文,无法确定杰夫和艾迪是否是邻居。因此,选择最佳模型取决于其他因素,例如表达风格或输出的详细程度。在这种情况下,由于所有模型都提供了类似的准确性和一致性,我会推荐选择最简洁和直接的响应,即qwen-7b-chat模型的响应。

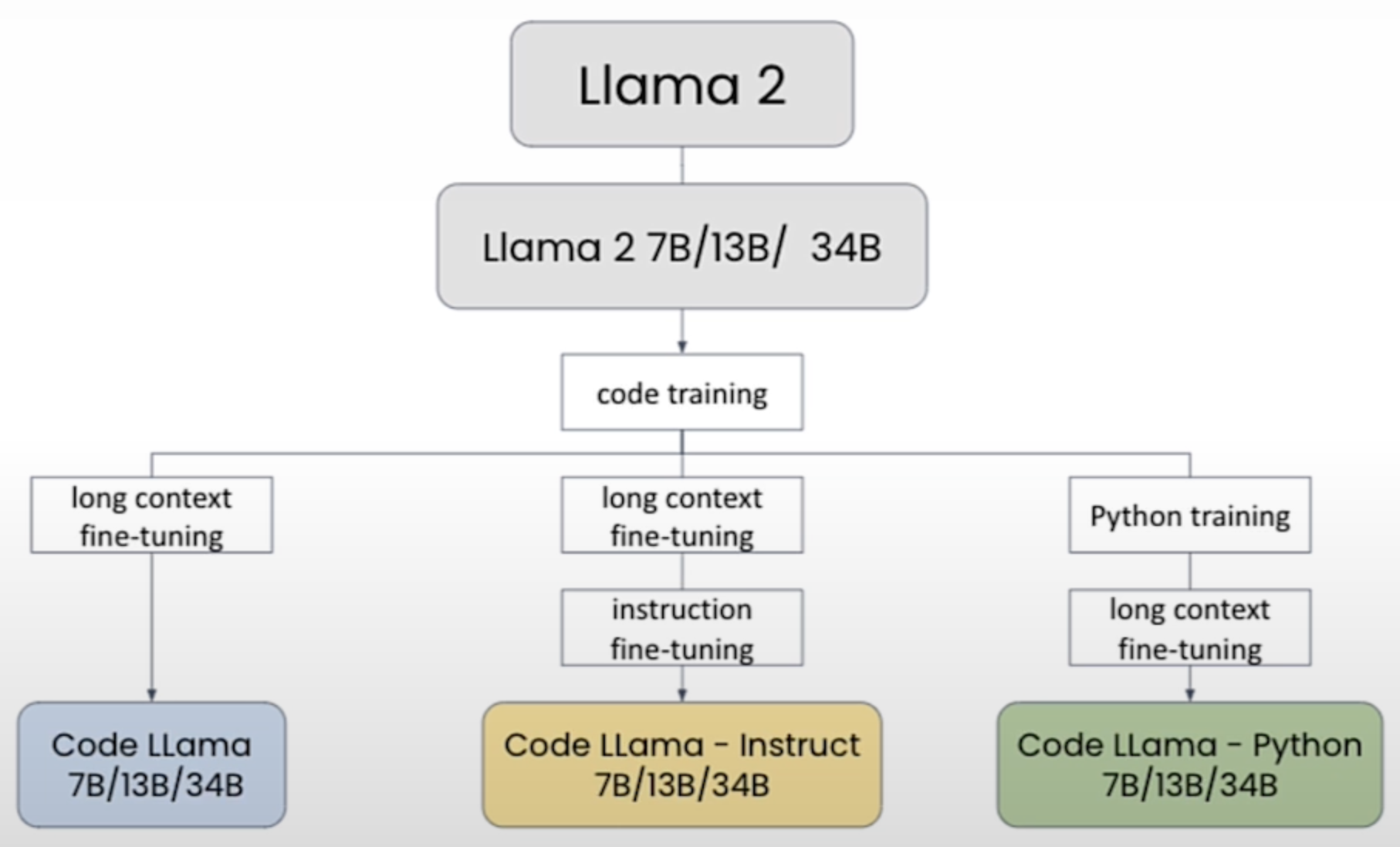

## Code Llama

这里是 Together.ai 提供的 Code Llama 模型的名称:

- ```togethercomputer/CodeLlama-7b```

- ```togethercomputer/CodeLlama-13b```

- ```togethercomputer/CodeLlama-34b```

- ```togethercomputer/CodeLlama-7b-Python```

- ```togethercomputer/CodeLlama-13b-Python```

- ```togethercomputer/CodeLlama-34b-Python```

- ```togethercomputer/CodeLlama-7b-Instruct```

- ```togethercomputer/CodeLlama-13b-Instruct```

- ```togethercomputer/CodeLlama-34b-Instruct```

### Writing code to solve a math problem (编写代码解决数学问题)

Lists of daily minimum and maximum temperatures:

```python

temp_min = [42, 52, 47, 47, 53, 48, 47, 53, 55, 56, 57, 50, 48, 45]

temp_max = [55, 57, 59, 59, 58, 62, 65, 65, 64, 63, 60, 60, 62, 62]

prompt = f"""

Below is the 14 day temperature forecast in fahrenheit degree:

14-day low temperatures: {temp_min}

14-day high temperatures: {temp_max}

Which day has the lowest temperature?

"""

response = llama(prompt)

print(response)

-

llama-2-7b-chat

Based on the temperature forecast you provided, the day with the lowest temperature is Day 7, with a low temperature of 47°F (8.3°C). (根据您提供的温度预报,温度最低的一天是第7天,最低温度为47°F(8.3°C)。)

-

llama-2-13b-chat

To find the day with the lowest temperature, we need to look at the list of low temperatures. The lowest temperature in the list is 42°F, which occurs on day 1. (要找到温度最低的一天,我们需要查看最低温度的列表。列表中最低的温度是42°F,出现在第1天。)

-

llama-2-70b-chat

The lowest temperature in the 14-day forecast is 42°F, which is expected on the first day. (14天预报中的最低温度为42°F,预计在第一天出现。)

中文

prompt = f"""

以下是14天的华氏度温度预报:

14天的最低温度:[42, 52, 47, 47, 53, 48, 47, 53, 55, 56, 57, 50, 48, 45]

14天的最高温度:[55, 57, 59, 59, 58, 62, 65, 65, 64, 63, 60, 60, 62, 62]

哪一天的温度最低?

"""

- qwen-1.8b-chat

最低温度出现在第14天,即12月15日,华氏度为47度。

- qwen-7b-chat

温度最低的那一天是42°F。

- qwen-14b-chat

最低温度出现在第14天,即45华氏度。

- qwen-72b-chat

根据提供的数据,14天中的最低温度分别为: [42, 52, 47, 47, 53, 48, 47, 53, 55, 56, 57, 50, 48, 45] 最低温度出现在第1天,为42华氏度。

- ChatGPT-3.5

为了找出哪一天的温度最低,我们需要比较最低温度列表中的值,并找到最小值所对应的索引,然后使用该索引在日期列表中找到相应的日期。以下是解决这个问题的Python代码:

# 14天的最低温度

lowest_temperatures = [42, 52, 47, 47, 53, 48, 47, 53, 55, 56, 57, 50, 48, 45]

# 对应的日期列表(假设从1号到14号)

dates = list(range(1, 15))

# 找到最低温度的最小值及其索引

min_temperature = min(lowest_temperatures)

min_index = lowest_temperatures.index(min_temperature)

# 使用索引找到对应的日期

coldest_day = dates[min_index]

print("最低温度出现在第{}天,最低温度为{}华氏度。".format(coldest_day, min_temperature))

这段代码将找到最低温度及其对应的日期,并输出结果。

问法二:直接给答案。 ❌

以下是14天的华氏度温度预报: 14天的最低温度:[42, 52, 47, 47, 53, 48, 47, 53, 55, 56, 57, 50, 48, 45] 14天的最高温度:[55, 57, 59, 59, 58, 62, 65, 65, 64, 63, 60, 60, 62, 62] 哪一天的温度最低?温度是多少?直接给答案。

第14天的温度最低,为45华氏度。

- kimi

根据提供的14天最低温度和最高温度数据,我们可以通过比较每天的最低温度来确定哪一天的温度最低。

最低温度数据如下:

[42, 52, 47, 47, 53, 48, 47, 53, 55, 56, 57, 50, 48, 45]

通过比较这些数值,我们可以看到最低温度是42华氏度。这个温度出现在预报期的第一天。因此,可以得出结论,在提供的14天的温度预报中,第一天的温度最低,为42华氏度。

问法二:直接给答案。 ❌

以下是14天的华氏度温度预报: 14天的最低温度:[42, 52, 47, 47, 53, 48, 47, 53, 55, 56, 57, 50, 48, 45] 14天的最高温度:[55, 57, 59, 59, 58, 62, 65, 65, 64, 63, 60, 60, 62, 62] 哪一天的温度最低?温度是多少?直接给答案。

哪一天的温度最低是第14天,温度是45华氏度。

- 通义千问

问法一:

以下是14天的华氏度温度预报: 14天的最低温度:[42, 52, 47, 47, 53, 48, 47, 53, 55, 56, 57, 50, 48, 45] 14天的最高温度:[55, 57, 59, 59, 58, 62, 65, 65, 64, 63, 60, 60, 62, 62] 哪一天的温度最低?温度是多少?

给出的14天华氏度温度预报中,包括了每天的最低温度和最高温度。要找出哪一天的温度最低,我们只需直接从最低温度列表中找到数值最小的那个即可。